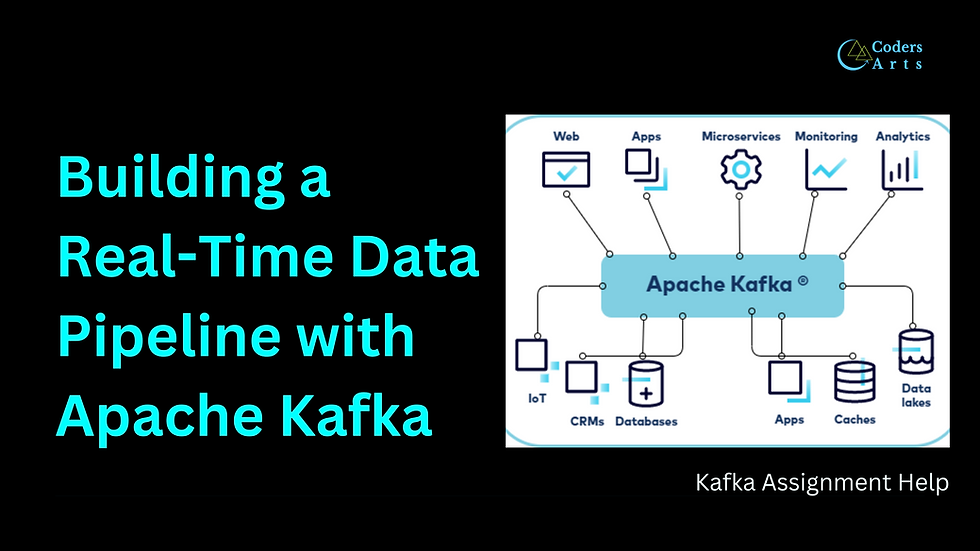

Are you eager to master the art of real-time data processing? Apache Kafka has emerged as a cornerstone technology for building robust data pipelines that handle high-throughput, low-latency data streams. In this comprehensive guide, we'll walk you through creating a real-time data pipeline using Apache Kafka.

This hands-on assignment will deepen your understanding of Kafka's core functionalities and equip you with practical skills applicable in today's data-driven industries.

Introduction

In the era of big data and the Internet of Things (IoT), the ability to process and analyze data in real time is crucial. Sensors and devices generate continuous streams of data that businesses leverage for insights, decision-making, and automation. Apache Kafka, a distributed streaming platform, excels in handling such data streams efficiently.

This guide focuses on building a real-time data pipeline that simulates sensor data, processes it, and stores it for further analysis. By completing this assignment, you'll gain valuable experience in setting up a Kafka cluster, developing producer and consumer applications, and integrating with databases.

Understanding the Objective

What You'll Achieve

Set Up a Kafka Cluster: Learn how to install and configure Apache Kafka on your local machine or a server.

Simulate Sensor Data: Develop a producer application that generates and sends data to Kafka.

Process Data in Real Time: Create a consumer application to process incoming data streams.

Store Processed Data: Integrate Kafka with a relational database to store the processed data.

Implement Logging and Error Handling: Ensure your applications are robust and reliable.

By the end of this assignment, you'll have a functioning real-time data pipeline and a deeper understanding of Kafka's role in modern data architectures.

Assignment Breakdown

Let's dive into the step-by-step process of building your real-time data pipeline.

1. Setting Up a Kafka Cluster

Prerequisites

Java Development Kit (JDK): Kafka requires Java 8 or later.

Apache Kafka Download: Obtain the latest version from the official website.

Installation Steps

Install Java:

Verify Java installation: java -version

If not installed, download and install JDK from Oracle or use a package manager.

Download Kafka:

Extract the downloaded archive to a desired location.

Start ZooKeeper (Kafka uses ZooKeeper for coordination):

bin/zookeeper-server-start.sh config/zookeeper.properties Start Kafka Broker: Open a new terminal and run:

bin/kafka-server-start.sh config/server.propertiesConfiguration Tips

Broker ID: Ensure broker.id is unique if setting up multiple brokers.

Listeners: Configure listeners=PLAINTEXT://:9092 for default port.

Log Directories: Set log.dirs to specify where logs are stored.

2. Simulating Sensor Data

Objective

Create a Producer application that simulates sensor readings and publishes them to a Kafka topic named sensor-data.

Development Steps

Choose a Programming Language:

Python (with kafka-python library)

Java (using Kafka's native client)

Install Dependencies:

Python:

pip install kafka-pythonJava: Include Kafka client dependencies in your pom.xml if using Maven.

Create the Producer Application

Python Example:

from kafka import KafkaProducer

import json

import time

import randomproducer = KafkaProducer(

bootstrap_servers=['localhost:9092'], value_serializer=lambda v: json.dumps(v).encode('utf-8'))while True: sensor_data = { 'sensor_id': random.randint(1, 100), 'temperature': random.uniform(20.0, 30.0), 'humidity': random.uniform(30.0, 50.0), 'timestamp': time.time() } producer.send('sensor-data', sensor_data) print(f"Data sent: {sensor_data}") time.sleep(1)Java Example:

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerRecord;

import java.util.Properties;

import com.google.gson.Gson;public class SensorDataProducer { public static void main(String[] args) { Properties props = new Properties(); props.put("bootstrap.servers", "localhost:9092"); props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer"); props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer"); KafkaProducer<String, String> producer = new KafkaProducer<>(props); Gson gson = new Gson(); while (true) { SensorData data = new SensorData(); String jsonData = gson.toJson(data); producer.send(new ProducerRecord<>("sensor-data", jsonData)); System.out.println("Data sent: " + jsonData); Thread.sleep(1000); } }}Explanation

Data Generation: Randomly generate sensor readings to simulate real-world data.

Serialization: Convert data to JSON format for easy parsing.

Sending Data: Use the send() method to publish messages to the sensor-data topic.

3. Data Processing with a Consumer Application

Objective

Develop a Consumer application that reads data from sensor-data, processes it, and stores it in a database.

Development Steps

Set Up the Consumer

Python Example:

from kafka import KafkaConsumer

import json

consumer = KafkaConsumer( 'sensor-data', bootstrap_servers=['localhost:9092'], value_deserializer=lambda m: json.loads(m.decode('utf-8')))for message in consumer: sensor_data = message.value # Process data hereProcess the Data

Transformation Example:

celsius = sensor_data['temperature']

fahrenheit = (celsius * 9/5) + 32

sensor_data['temperature_f'] = fahrenheit

Set Up the Database

Install MySQL or PostgreSQL.

Create a database and a table to store sensor data.

CREATE TABLE sensor_readings ( sensor_id INT, temperature_c FLOAT, temperature_f FLOAT, humidity FLOAT, timestamp DOUBLE

);Store Data in the Database

Python Example using psycopg2 for PostgreSQL:

import psycopg2

conn = psycopg2.connect("dbname='sensors' user='user' host='localhost' password='password'")cursor = conn.cursor()insert_query = """INSERT INTO sensor_readings (sensor_id, temperature_c, temperature_f, humidity, timestamp)VALUES (%s, %s, %s, %s, %s)"""data_tuple = ( sensor_data['sensor_id'], sensor_data['temperature'], sensor_data['temperature_f'], sensor_data['humidity'], sensor_data['timestamp'])cursor.execute(insert_query, data_tuple)

conn.commit()Explanation

Deserialization: Convert JSON strings back into Python dictionaries or Java objects.

Data Transformation: Apply necessary computations, such as unit conversions.

Database Insertion: Use parameterized queries to insert data securely.

4. Implementing Logging and Error Handling

Logging

Use the logging module in Python or Log4j in Java.

Log key events such as successful data processing and any exceptions.

import logging

logging.basicConfig(level=logging.INFO)

logging.info('Data processed successfully.')

Error Handling

Use try-except blocks to catch exceptions.

try:

# Code that might raise an exception

except Exception as e:

logging.error(f"Error occurred: {e}")

Best Practices

Granular Logging: Differentiate between debug, info, warning, error, and critical logs.

Exception Handling: Handle specific exceptions where possible.

5. Running and Testing Your Pipeline

Start Kafka and ZooKeeper if not already running.

Run the Producer to start sending data.

Run the Consumer to process and store data.

Monitor the Database to verify that data is being stored correctly.

Check Logs for any errors or warnings.

Common Challenges

Data Serialization Issues

Problem: Mismatch in data formats between producer and consumer.

Solution: Ensure consistent serialization/deserialization methods are used.

Handling Large Data Volumes

Problem: Consumer lags behind due to high data throughput.

Solution: Optimize consumer processing time or scale out consumers.

Offset Management

Problem: Reprocessing or missing messages due to incorrect offset handling.

Solution: Understand Kafka's offset management and commit strategies.

Database Bottlenecks

Problem: Slow database writes causing backpressure.

Solution: Use batch inserts or implement asynchronous database operations.

Expert Tips

Optimize Producer and Consumer Configurations

Batch Size: Adjust batch.size and linger.ms for the producer to optimize throughput.

Consumer Groups: Utilize consumer groups to scale out processing.

Implement Schema Validation

Use Apache Avro or JSON Schema to enforce data structure consistency.

Secure Your Kafka Cluster

Implement authentication and authorization using SSL and SASL.

Monitor Performance

Use tools like Kafka Manager or Confluent Control Center for monitoring.

How Codersarts Can Assist

At Codersarts, we understand that building a real-time data pipeline can be complex and challenging. Our team of experts is here to provide you with:

Personalized Guidance: One-on-one tutoring to help you understand each component.

Code Review: Expert feedback on your code to improve efficiency and reliability.

Troubleshooting Support: Assistance in resolving any issues you encounter.

Advanced Insights: Learn best practices and advanced techniques from industry professionals.

Conclusion

Building a real-time data pipeline with Apache Kafka is a valuable skill in today's data-centric world. This assignment not only enhances your understanding of Kafka but also equips you with practical experience in data processing and integration. By following this guide, you've set up a functional pipeline that simulates sensor data, processes it in real time, and stores it for future analysis.

Feeling overwhelmed or need expert assistance? Codersarts is here to help you excel in your Kafka assignments and projects. Our professionals are ready to guide you every step of the way.

Get Started Today!

Tags: Kafka assignment help, real-time data pipeline, Apache Kafka tutorial, Kafka Producer and Consumer, streaming data processing, Kafka expert assistance

Comments