Fine-Tuning a Pre-Trained Model for Research Paper Title Generation

- Codersarts

- Aug 21, 2024

Project Overview

The goal of this project is to build a pipeline that extracts titles and abstracts from research papers, formats the dataset for use with Hugging Face, and fine-tunes a pre-trained model to automatically generate research paper titles from abstracts. The project will involve several stages, including data extraction, dataset preparation, model loading, tokenization, baseline generation, and model fine-tuning using Low-Rank Adaptation (LoRA).

Objectives

Data Extraction: Extract titles and abstracts from research papers available online.

Dataset Preparation: Format the extracted data into a Hugging Face dataset.

Model Baseline: Use a pre-trained model to generate titles from abstracts as a baseline.

Fine-Tuning: Fine-tune the pre-trained model using LoRA to improve the title generation based on the training dataset.

Evaluation: Compare the titles generated by the fine-tuned model with the baseline results to assess improvements.

Technical Stack

Programming Language: Python

Libraries and Tools:

Scrapers: openreview_scraper, arxiv-miner

Hugging Face: datasets, transformers

Model Fine-Tuning: LoRA (Low-Rank Adaptation)

Model Training & Evaluation: PyTorch or TensorFlow

Version Control: GitHub

Project Phases

Phase 1: Data Extraction and Dataset Preparation

Task 1.1: Data Acquisition and Preprocessing

Data Source Selection:

Identify relevant online sources of research papers (e.g., arXiv, OpenReview, ACM Digital Library).

Consider legal restrictions and terms of service for data scraping.

Data Scraping:

Use the openreview_scraper library (https://github.com/pranftw/openreview_scraper) to extract titles and abstracts from OpenReview venues. Other sources, such as arXiv, need a different tool (for example, arxiv-miner from the technical stack).

Implement robust error handling to manage potential website changes or inconsistencies.
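The error handling mentioned above could be sketched as a small retry wrapper with exponential backoff. This is only an illustration under stated assumptions: `fetch_with_retry` and the `fetch` callable are hypothetical names, not part of openreview_scraper or arxiv-miner, and real code would catch narrower exception types than `Exception`.

```python
import time


def fetch_with_retry(fetch, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch() and retry with exponential backoff if it raises.

    `fetch` is any zero-argument callable that returns scraped records
    (hypothetical; wrap whichever scraper call you actually use).
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch()
        except Exception as exc:  # narrow this to network/parse errors in real code
            if attempt == max_attempts:
                raise  # give up and surface the last error
            delay = base_delay * 2 ** (attempt - 1)  # 1x, 2x, 4x, ...
            print(f"attempt {attempt} failed ({exc!r}); retrying in {delay:.1f}s")
            sleep(delay)
```

Passing `sleep` as a parameter keeps the wrapper easy to test without real waiting.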

Data Cleaning:

Remove irrelevant data (HTML tags, extra whitespaces, etc.).

Standardize the text format (e.g., casing and punctuation) consistently across titles and abstracts.

Use regular expressions or string manipulation techniques for cleaning.

Data Formatting:

Convert the cleaned data into a dictionary format compatible with Hugging Face Dataset.from_dict.

The dictionary should map each column name ("title" and "abstract") to a list of values. Both lists must have the same length and order, so that each index corresponds to one paper.
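A minimal sketch of the cleaning and formatting steps, using only the standard library. It assumes the scraper returns a list of records with "title" and "abstract" keys; the helper names `clean_text` and `to_columns` are made up for illustration. Lowercasing is left out here so that title casing is preserved, which is a judgment call rather than a requirement.

```python
import html
import re

TAG_RE = re.compile(r"<[^>]+>")  # naive HTML tag matcher, fine for simple markup
WS_RE = re.compile(r"\s+")


def clean_text(text):
    """Strip HTML tags, decode entities, and collapse runs of whitespace."""
    text = TAG_RE.sub(" ", text)   # drop tags first so decoded "<" is not mistaken for a tag
    text = html.unescape(text)     # e.g. "&amp;" becomes "&"
    return WS_RE.sub(" ", text).strip()


def to_columns(records):
    """Turn a list of {"title", "abstract"} records into column lists."""
    columns = {"title": [], "abstract": []}
    for record in records:
        title = clean_text(record.get("title", ""))
        abstract = clean_text(record.get("abstract", ""))
        if title and abstract:  # skip papers missing either field
            columns["title"].append(title)
            columns["abstract"].append(abstract)
    return columns
```

The resulting dictionary has one list per column, which is the shape Dataset.from_dict expects.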

Task 1.2: Hugging Face Dataset Creation

Import the datasets library from Hugging Face (https://huggingface.co/docs/datasets/en/create_dataset).

Utilize the Dataset.from_dict function to create a Hugging Face dataset from the processed dictionary data.

Consider splitting the dataset into training, validation, and testing sets for model evaluation later.
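Dataset creation and splitting might look like the sketch below. The 80/10/10 proportions, the seed, and the function names are assumptions for illustration; the `datasets` import sits inside the function so the validation helper can be used without the library installed.

```python
def validate_columns(columns):
    """Check that every column has the same number of rows; return that number."""
    lengths = {name: len(values) for name, values in columns.items()}
    if len(set(lengths.values())) > 1:
        raise ValueError(f"columns differ in length: {lengths}")
    return next(iter(lengths.values()), 0)


def build_splits(columns, eval_fraction=0.2, seed=42):
    """Create a Hugging Face dataset and split it into train/validation/test."""
    from datasets import Dataset, DatasetDict  # requires `pip install datasets`

    validate_columns(columns)
    dataset = Dataset.from_dict(columns)
    # First hold out eval_fraction of the rows, then halve that hold-out set.
    first = dataset.train_test_split(test_size=eval_fraction, seed=seed)
    second = first["test"].train_test_split(test_size=0.5, seed=seed)
    return DatasetDict(
        {
            "train": first["train"],
            "validation": second["train"],
            "test": second["test"],
        }
    )
```

Fixing the seed keeps the splits reproducible, so baseline and fine-tuned models are compared on the same test papers.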

Phase 2: Baseline Model Implementation

Task 2.1: Pre-Trained Model Selection and Loading

Investigate pre-trained models suitable for text generation tasks, such as the encoder-decoder models BART and T5, or the decoder-only GPT-2.

Review how the chosen model was pre-trained, for example with masked language modeling or a denoising objective, since this shapes its ability to generate text.

Download the pre-trained model weights from the Hugging Face model hub (see the Transformers documentation: https://huggingface.co/docs/transformers/en/index).

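Use the AutoModelForSeq2SeqLM class from the Hugging Face Transformers library to load an encoder-decoder model such as BART or T5. A decoder-only model such as GPT-2 is loaded with AutoModelForCausalLM instead.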

Task 2.2: Model Tokenization

Load the tokenizer associated with the pre-trained model using the AutoTokenizer class.

Tokenize the title and abstract pairs in your dataset using the loaded tokenizer. This converts text into numerical representations suitable for model input.
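Loading and tokenization could be sketched as follows. The checkpoint name "t5-small", the "summarize: " prefix, and the length limits are assumptions chosen for illustration, not values from the project brief; the function names are likewise hypothetical.

```python
def load_model_and_tokenizer(model_name="t5-small"):
    """Load a seq2seq checkpoint and its matching tokenizer from the hub."""
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer  # requires transformers

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)
    return model, tokenizer


def tokenize_batch(batch, tokenizer, prefix="summarize: ",
                   max_input_len=512, max_target_len=32):
    """Tokenize abstracts as model inputs and titles as labels."""
    inputs = [prefix + abstract for abstract in batch["abstract"]]
    model_inputs = tokenizer(inputs, max_length=max_input_len, truncation=True)
    # text_target tells the tokenizer these strings are decoder targets.
    labels = tokenizer(text_target=batch["title"],
                       max_length=max_target_len, truncation=True)
    model_inputs["labels"] = labels["input_ids"]
    return model_inputs
```

With a Hugging Face dataset, this would typically be applied as `dataset.map(tokenize_batch, batched=True, fn_kwargs={"tokenizer": tokenizer})`.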

Task 2.3: Baseline Generation

Feed the tokenized abstracts into the pre-trained model using the generate function.

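Configure generation for short, title-like outputs: for example, add a task prefix where the model expects one (as T5 does) and limit the maximum number of generated tokens.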

Evaluate the generated titles qualitatively and quantitatively (e.g., BLEU score) to assess the pre-trained model's baseline performance.
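Baseline generation might be sketched like this. The function takes an already loaded model and tokenizer; the prefix, batch size, beam count, and token limit are illustrative assumptions, and the inputs are assumed to be on the same device as the model (CPU in the simplest case).

```python
def generate_titles(model, tokenizer, abstracts, prefix="summarize: ",
                    batch_size=8, max_new_tokens=32):
    """Generate one title per abstract, processing abstracts in small batches."""
    titles = []
    for start in range(0, len(abstracts), batch_size):
        chunk = [prefix + a for a in abstracts[start:start + batch_size]]
        inputs = tokenizer(chunk, return_tensors="pt", padding=True,
                           truncation=True, max_length=512)
        # Beam search with a short output budget, since titles are brief.
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens,
                                    num_beams=4)
        titles.extend(tokenizer.batch_decode(output_ids, skip_special_tokens=True))
    return titles
```

Running the same function before and after fine-tuning keeps the decoding settings identical, so differences in the titles come from the model rather than from the generation configuration.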

Phase 3: Model Fine-Tuning with LoRA

Task 3.1: Fine-Tuning with LoRA

Define a custom training loop:

Split the dataset into training and validation sets (if not already done in Task 1.2).

Create an optimizer (e.g., Adam) and a loss function (e.g., cross-entropy) suitable for text generation tasks.

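Implement LoRA by freezing the pre-trained weights and adding small trainable low-rank matrices to selected layers (typically the attention projections), so that only these adapter weights are updated during training. The rank and scaling factor of the adapters are the main LoRA-specific hyperparameters.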

Train the model on the training data using the defined training loop.

Monitor performance on the validation set to prevent overfitting.

Adjust hyperparameters (learning rates, batch sizes, etc.) for optimal performance.
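LoRA keeps the pre-trained weights frozen and trains a pair of small low-rank matrices per adapted layer. A rough sketch of the fine-tuning task follows, assuming the Hugging Face peft library (not named in the technical stack) and a T5-style model whose attention projections are called "q" and "v". The rank, alpha, and dropout values are illustrative, and all function names are hypothetical.

```python
def lora_param_count(d_out, d_in, r):
    """Trainable parameters LoRA adds to one d_out x d_in weight matrix.

    The update is B @ A with B of shape (d_out, r) and A of shape (r, d_in).
    """
    return r * (d_in + d_out)


def wrap_with_lora(model, r=8, alpha=16, dropout=0.05):
    """Freeze the base model and attach LoRA adapters (assumes peft is installed)."""
    from peft import LoraConfig, TaskType, get_peft_model

    config = LoraConfig(
        task_type=TaskType.SEQ_2_SEQ_LM,
        r=r,
        lora_alpha=alpha,
        lora_dropout=dropout,
        target_modules=["q", "v"],  # T5 module names; other models use different names
    )
    return get_peft_model(model, config)


def train_one_epoch(model, batches, optimizer):
    """Run one pass over the batches and return the mean training loss."""
    model.train()
    total, count = 0.0, 0
    for batch in batches:
        # Seq2seq models compute cross-entropy internally when labels are passed.
        loss = model(**batch).loss
        loss.backward()
        optimizer.step()
        optimizer.zero_grad()
        total += loss.item()
        count += 1
    return total / max(count, 1)
```

For a 512 x 512 projection, rank 8 adds 8,192 trainable parameters instead of updating all 262,144. In practice the batches would come from a PyTorch DataLoader (for example with DataCollatorForSeq2Seq for padding), and the optimizer would be built over only the parameters that still require gradients.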

Task 3.2: Generate and Evaluate Fine-Tuned Titles

Use the fine-tuned model to generate titles from abstracts in the test dataset.

Compare these generated titles with the baseline titles to evaluate improvements.

Evaluation Criteria

Accuracy of Title Generation:

Compare the accuracy of titles generated by the baseline model versus the fine-tuned model.

Use evaluation metrics such as BLEU, ROUGE, or custom metrics based on human judgment.

Model Efficiency:

Assess the computational efficiency of the fine-tuning process.

Evaluate the model’s performance in terms of inference speed and resource usage.
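For a quick quantitative comparison, a simple unigram-overlap F1 score can be computed without extra dependencies. This is a simplified, ROUGE-1-style stand-in written for illustration, not the official BLEU or ROUGE implementation; for a final report an established metrics library would be the safer choice.

```python
import re
from collections import Counter


def tokens(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def unigram_f1(prediction, reference):
    """F1 over overlapping unigrams between a generated and a reference title."""
    pred, ref = Counter(tokens(prediction)), Counter(tokens(reference))
    overlap = sum((pred & ref).values())  # clipped count of shared words
    if overlap == 0:
        return 0.0
    precision = overlap / sum(pred.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def mean_f1(predictions, references):
    """Average unigram F1 over paired lists of generated and reference titles."""
    scores = [unigram_f1(p, r) for p, r in zip(predictions, references)]
    return sum(scores) / len(scores) if scores else 0.0
```

Calling `mean_f1` once on the baseline titles and once on the fine-tuned titles, against the same reference titles from the test set, gives two directly comparable numbers.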

Deliverables

Formatted Dataset: A well-structured Hugging Face dataset containing titles and abstracts.

Baseline Model Outputs: Titles generated by the pre-trained model before fine-tuning.

Fine-Tuned Model Outputs: Titles generated after fine-tuning with LoRA.

Evaluation Report: A detailed report comparing the baseline and fine-tuned results, including performance metrics and observations.

This project not only allows for a hands-on experience with cutting-edge NLP techniques but also contributes to the broader goal of automating the research process. If additional iterations or model experiments are needed beyond the specified scope, they can be discussed with additional time and cost adjustments.

Need a Custom Research Paper Title Generation System?

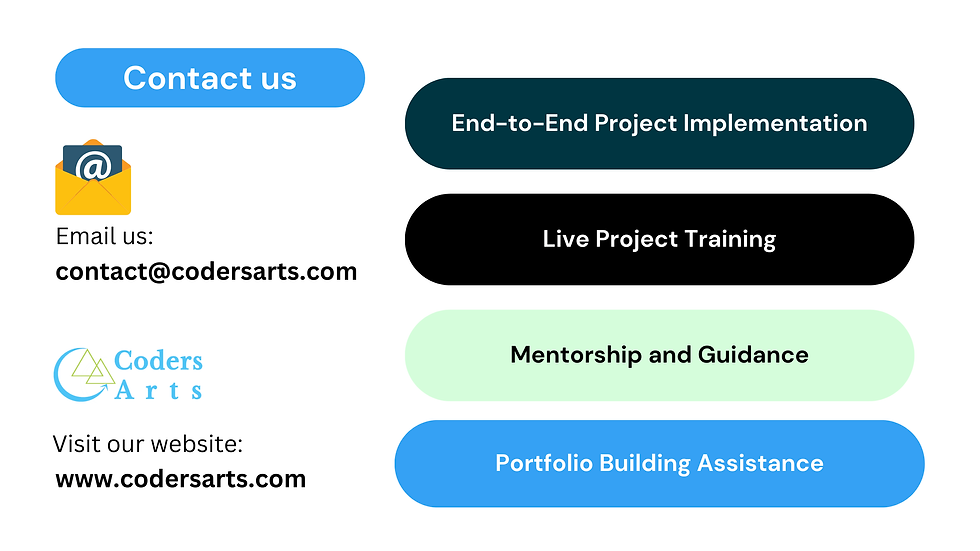

At Codersarts, we specialize in delivering tailored solutions for complex machine learning and NLP projects like this one. Whether you need end-to-end implementation, custom model fine-tuning, or expert consulting, we offer comprehensive support at every stage of your project, from dataset creation to deploying state-of-the-art models. Our team of experienced developers and data scientists can:

Extract titles and abstracts from research papers on any online platform.

Create high-quality Hugging Face datasets for your project.

Fine-tune pre-trained models using LoRA for optimal performance.

Generate novel research paper titles based on abstracts.

Let us bring your ideas to life! Contact Codersarts today to discuss how we can assist you in achieving your project goals. Whether it's additional iterations, fine-tuning, or a custom solution, we're here to provide the expertise you need. Let's start building something extraordinary together!

Contact us today for a free consultation and learn how we can help you achieve your goals.
