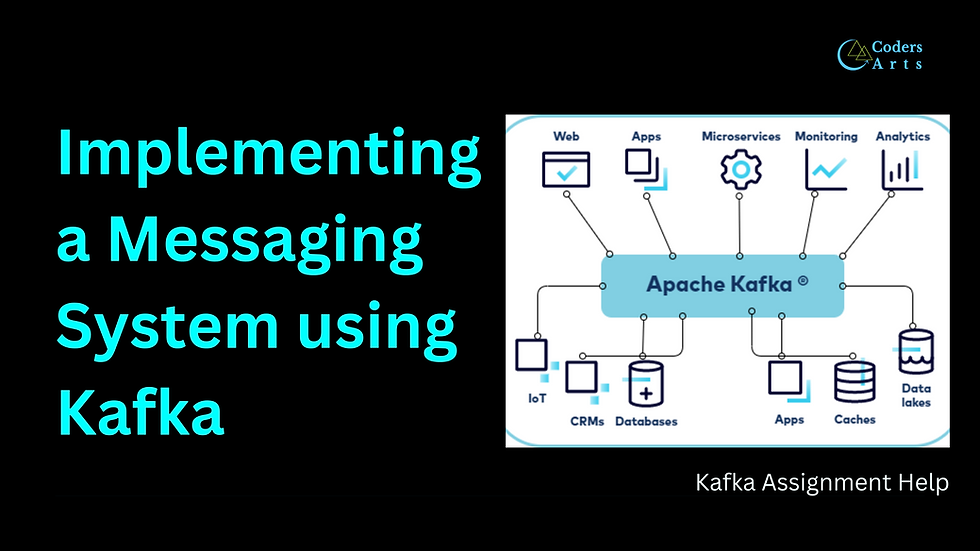

Are you looking to enhance your skills in building scalable and reliable messaging systems? Apache Kafka is a distributed streaming platform that handles real-time data feeds with high throughput and low latency. In this assignment, we'll guide you through building a messaging system with Kafka that enables communication between microservices in a distributed application.

Introduction

In modern software architecture, microservices have become a standard approach for building large-scale applications. They allow for modular development and deployment, making systems more scalable and maintainable. However, communication between these microservices can be complex. This is where a robust messaging system comes into play.

Apache Kafka provides a fault-tolerant, scalable, and high-throughput messaging system. By leveraging Kafka, you can ensure reliable communication between microservices, handle failures gracefully, and scale your application horizontally to meet increasing workloads.

Assignment Objectives

Goals

Publish and Subscribe: Implement a system where microservices can publish messages to topics and subscribe to topics of interest.

Ensure Reliability: Guarantee message delivery and handle failures gracefully using Kafka's features.

Scale Horizontally: Utilize Kafka's replication and partitioning to scale the messaging system and handle increasing workloads.

Expected Outcomes

Gain practical experience with Kafka producers and consumers.

Understand reliable messaging patterns like at-least-once and at-most-once delivery.

Learn how to leverage Kafka's replication and partitioning for scalability and fault tolerance.

Step-by-Step Guide

1. Environment Setup

Prerequisites

Java Development Kit (JDK): Version 8 or later.

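Apache Kafka: A recent stable 3.x release. The steps below start ZooKeeper, which Kafka 4.0 and later no longer use (those versions run in KRaft mode).

Apache ZooKeeper: Bundled with Kafka 3.x and earlier for cluster coordination.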

Programming Language: Java, Python, or any language with Kafka client support.

Installing Apache Kafka

Download Kafka from the official website.

Extract Files: Unzip the downloaded archive to a preferred directory.

Start ZooKeeper:

bin/zookeeper-server-start.sh config/zookeeper.properties

Start Kafka Broker:

bin/kafka-server-start.sh config/server.properties

2. Designing the Messaging System

Architecture Overview

Producers: Microservices that publish messages to Kafka topics.

Consumers: Microservices that subscribe to Kafka topics to receive messages.

Topics: Categories or feed names to which messages are published.

Partitions: Subdivisions of topics to enable parallelism.

Replication: Duplication of partitions across brokers for fault tolerance.

Creating Kafka Topics

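Create topics with partitions and replication factors to ensure scalability and reliability. The replication factor cannot exceed the number of running brokers, so the command below needs at least two brokers; on the single-broker setup from step 1, use --replication-factor 1 instead.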

bin/kafka-topics.sh --create --topic microservice-communication --bootstrap-server localhost:9092 --replication-factor 2 --partitions 3

3. Implementing Producers and Consumers

Producer Implementation

Java Example

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;
import java.util.Properties;

public class MicroserviceProducer {
    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "localhost:9092");
        props.put("acks", "all"); // Wait for all in-sync replicas to acknowledge
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

        KafkaProducer<String, String> producer = new KafkaProducer<>(props);
        String topic = "microservice-communication";
        String message = "Hello from Producer";

        producer.send(new ProducerRecord<>(topic, message));
        producer.close(); // Flushes pending sends, then releases resources
    }
}

Explanation

Bootstrap Servers: Specifies Kafka brokers.

Acks: Set to "all" so the leader waits for every in-sync replica to acknowledge a write. Combined with retries, this gives at-least-once delivery.

Serializers: Serialize keys and values for transmission.

Consumer Implementation

Java Example

import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import java.time.Duration;
import java.util.Collections;
import java.util.Properties;

public class MicroserviceConsumer {
    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "localhost:9092");
        props.put("group.id", "microservice-group");
        props.put("enable.auto.commit", "true"); // Automatic offset committing
        props.put("auto.commit.interval.ms", "1000");
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

        KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);
        consumer.subscribe(Collections.singletonList("microservice-communication"));

        // Poll forever; each poll returns the records fetched since the last call
        while (true) {
            ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));
            records.forEach(record -> {
                System.out.printf("Received message: %s%n", record.value());
            });
        }
    }
}

Explanation

Group ID: Identifies the consumer group for load balancing.

Enable Auto Commit: Automatically commits offsets at intervals.

Deserializers: Deserialize received messages.

4. Implementing Reliable Messaging Patterns

At-Least-Once Delivery

Producer Side:

Set acks to "all".

Implement retry logic with retries property.

Example:

props.put("acks", "all");
props.put("retries", 3);

Consumer Side:

Use manual offset commits to control when messages are marked as consumed.

Set enable.auto.commit to false:

props.put("enable.auto.commit", "false");

Commit offsets after processing:

consumer.commitSync();

At-Most-Once Delivery

Producer Side:

Set acks to "0" or "1".

Disable retries (set retries to 0), since a retried send can produce a duplicate.

Consumer Side:

Commit offsets before processing each batch, so a crash during processing skips those messages instead of redelivering them.

Less reliable but faster processing.
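The difference between the two patterns above comes down to whether the offset is committed before or after the message is processed. The following is a toy model in plain Java, not Kafka client code: the class name, the `run` method, and the simulated crash are all hypothetical and exist only to make the ordering visible.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of one consumer reading one partition; it does not use the Kafka client. */
class DeliverySemanticsDemo {

    /**
     * Handles every message in the log. Each message takes two steps, and the
     * consumer "crashes" once, between the two steps of the message at crashAt,
     * then restarts from the last committed offset.
     *
     * commitFirst = true  models at-most-once  (commit, then process).
     * commitFirst = false models at-least-once (process, then commit).
     */
    static List<String> run(List<String> log, int crashAt, boolean commitFirst) {
        List<String> processed = new ArrayList<>();
        int committed = 0;      // next offset to read after a restart
        boolean crashed = false;
        int offset = 0;

        while (offset < log.size()) {
            // Step 1: either commit or process, depending on the pattern.
            if (commitFirst) {
                committed = offset + 1;
            } else {
                processed.add(log.get(offset));
            }

            // Simulated crash between step 1 and step 2 (happens only once).
            if (!crashed && offset == crashAt) {
                crashed = true;
                offset = committed; // restart from the committed position
                continue;
            }

            // Step 2: the remaining half of the work.
            if (commitFirst) {
                processed.add(log.get(offset));
            } else {
                committed = offset + 1;
            }
            offset++;
        }
        return processed;
    }
}
```

With a log of a, b, c and a crash while handling b, the commit-first run processes a and c (b is lost), while the process-first run processes a, b, b, c (b is handled twice). This is why at-least-once consumers usually need idempotent processing logic.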

Exactly-Once Delivery (Advanced)

Requires idempotent producers and transactional writes.

Set enable.idempotence to true (Kafka version 0.11+).

props.put("enable.idempotence", "true");

5. Leveraging Kafka's Replication and Partitioning

Replication for Fault Tolerance

Replication Factor: Number of copies of data across brokers.

Ensure critical topics have a replication factor greater than 1.

Broker Failures: Kafka automatically switches to a replica if the leader broker fails.
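To make the failover idea concrete, here is a simplified model of leader selection for a single partition. It assumes the new leader is the first replica in the assignment list that is still alive and in sync; the real election is performed by Kafka's controller and has more cases. The class and method names are hypothetical.

```java
import java.util.List;
import java.util.Optional;
import java.util.Set;

/** Simplified model of leader failover for one partition; not Kafka's actual controller logic. */
class LeaderFailoverDemo {

    /**
     * Picks the first replica, in assignment order, that is still alive and in sync.
     * An empty result means the partition has no eligible leader and is unavailable.
     */
    static Optional<Integer> electLeader(List<Integer> assignedReplicas, Set<Integer> liveInSyncReplicas) {
        return assignedReplicas.stream()
                .filter(liveInSyncReplicas::contains)
                .findFirst();
    }
}
```

For a partition assigned to brokers 1 and 2 (replication factor 2), broker 1 leads while both are healthy, broker 2 takes over if broker 1 fails, and the partition becomes unavailable if both are down. With a replication factor of 1 there is nothing to fail over to.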

Partitioning for Scalability

Parallel Processing: Partitions allow consumers to read from different partitions in parallel.

Consumer Groups: Distribute load among multiple consumer instances.

Assign Partitions: Kafka handles partition assignment automatically within a consumer group.
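The sketch below illustrates how partitions might be spread across the members of a consumer group. It uses a plain round-robin rule as a stand-in; Kafka's built-in assignors (range, round-robin, sticky) differ in detail, and the class and method names here are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Round-robin stand-in for consumer group partition assignment; not Kafka's own assignor. */
class PartitionAssignmentDemo {

    /** Maps each consumer to the partitions it would own. */
    static Map<String, List<Integer>> assign(List<String> consumers, int partitions) {
        Map<String, List<Integer>> result = new LinkedHashMap<>();
        for (String consumer : consumers) {
            result.put(consumer, new ArrayList<>());
        }
        if (consumers.isEmpty()) {
            return result; // nobody to assign to
        }
        for (int p = 0; p < partitions; p++) {
            // Each partition has exactly one owner within the group.
            String owner = consumers.get(p % consumers.size());
            result.get(owner).add(p);
        }
        return result;
    }
}
```

With three partitions, two consumers split them as two and one. With four consumers, the fourth receives nothing, which is why the partition count sets the upper bound on useful consumers in a group.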

6. Handling Failures Gracefully

Producer Retries and Timeouts

Retries: Configure the number of retries for failed send attempts.

props.put("retries", 3);

Retry Backoff: Time to wait between retries.

props.put("retry.backoff.ms", 100);

Consumer Error Handling

Deserialization Errors: Handle cases where messages cannot be deserialized.

Processing Exceptions: Wrap processing logic in try-catch blocks.

try {
    // Message processing logic
} catch (Exception e) {
    // Handle exception
}

Graceful Shutdown

Ensure producers and consumers close connections properly.

Use shutdown hooks to manage resource cleanup.
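One way to structure a graceful shutdown is a stop flag checked by the polling loop, with cleanup in a finally block. The sketch below uses only the JDK so it runs on its own: the sleep stands in for consumer.poll(...) and the closed flag stands in for consumer.close(). The class and its methods are hypothetical. In a real consumer, the shutdown hook would typically also call consumer.wakeup() so that a blocked poll returns promptly.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;

/** JDK-only sketch of a poll loop that stops cleanly; the Kafka calls are represented by stand-ins. */
class GracefulWorker implements Runnable {
    private final AtomicBoolean running = new AtomicBoolean(true);
    private final CountDownLatch finished = new CountDownLatch(1);
    private volatile boolean closed = false;

    @Override
    public void run() {
        try {
            while (running.get()) {
                Thread.sleep(10); // stand-in for consumer.poll(...) and message processing
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            closed = true;        // stand-in for consumer.close() or producer.close()
            finished.countDown(); // tell shutdown() that cleanup is done
        }
    }

    /** Asks the loop to stop and waits up to five seconds for cleanup to finish. */
    void shutdown() {
        running.set(false);
        try {
            finished.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    boolean isClosed() {
        return closed;
    }

    /** Registers a JVM shutdown hook so Ctrl+C or SIGTERM triggers the same clean stop. */
    void registerShutdownHook() {
        Runtime.getRuntime().addShutdownHook(new Thread(this::shutdown));
    }
}
```

A service would create the worker, call registerShutdownHook(), and start it on its own thread. Waiting inside the hook matters: the JVM exits once all hooks return, so the hook must block until the loop has finished its cleanup.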

7. Scaling the Messaging System

Adding More Brokers

Scale horizontally by adding more Kafka brokers to the cluster.

Update bootstrap.servers to include new brokers.

Increasing Partitions

More partitions allow for greater parallelism.

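Increase partitions for topics to distribute load. Partition counts can only be increased, never decreased, and adding partitions changes which partition a given key maps to.

bin/kafka-topics.sh --alter --topic microservice-communication --partitions 6 --bootstrap-server localhost:9092

Scaling Consumers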

Add more consumer instances within the same consumer group.

Kafka will reassign partitions among consumers.

8. Testing the Messaging System

Simulate Microservices

Create multiple producer and consumer instances to simulate microservices.

Test message flow and ensure all messages are delivered and processed.
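A simple way to check that every message was delivered is to give each test message a unique ID, record the IDs sent and received, and compare the two lists afterwards. The helper below is a hypothetical sketch of that comparison; how the IDs are collected from your producers and consumers is left to the test harness.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

/** Compares sent and received message IDs to detect loss and redelivery in a test run. */
class DeliveryAudit {

    /** IDs that were sent but never received (message loss). */
    static Set<String> missing(List<String> sent, List<String> received) {
        Set<String> result = new TreeSet<>(sent);
        result.removeAll(received);
        return result;
    }

    /** IDs that were received more than once (redelivery). */
    static Set<String> duplicates(List<String> received) {
        Set<String> seen = new HashSet<>();
        Set<String> dups = new TreeSet<>();
        for (String id : received) {
            if (!seen.add(id)) { // add() returns false when the ID was already seen
                dups.add(id);
            }
        }
        return dups;
    }
}
```

Under at-least-once delivery, an empty missing set with some duplicates is the expected result after a failure test. Any missing IDs point to a configuration or commit-ordering problem.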

Failure Scenarios

Broker Failure: Stop a broker and observe failover.

Consumer Failure: Terminate a consumer and see if others take over partitions.

Network Issues: Simulate network latency or partitioning.

9. Monitoring and Metrics

Kafka Monitoring Tools

Kafka Manager (now CMAK, Cluster Manager for Apache Kafka): Web-based tool for managing Kafka clusters.

Prometheus and Grafana: For collecting and visualizing metrics.

JMX Metrics: Kafka exposes metrics via Java Management Extensions (JMX).

Key Metrics to Monitor

Producer Metrics: Record send rate, retries, and errors.

Consumer Metrics: Monitor lag, processing rate, and commit offsets.

Broker Metrics: Keep an eye on disk usage, network I/O, and request handling.
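Consumer lag is the gap between the newest offset in a partition and the offset the group has committed. The sketch below shows only the arithmetic, assuming the two offset maps have already been fetched by your monitoring tool; the class name, method names, and the choice to treat a missing committed offset as 0 are all assumptions made for illustration.

```java
import java.util.HashMap;
import java.util.Map;

/** Computes consumer lag from offsets that a monitoring tool has already collected. */
class ConsumerLagDemo {

    /** Lag per partition = log end offset minus committed offset, never below zero. */
    static Map<Integer, Long> lagPerPartition(Map<Integer, Long> logEndOffsets,
                                              Map<Integer, Long> committedOffsets) {
        Map<Integer, Long> lag = new HashMap<>();
        for (Map.Entry<Integer, Long> entry : logEndOffsets.entrySet()) {
            // Assumption: a partition with no committed offset counts from 0.
            long committed = committedOffsets.getOrDefault(entry.getKey(), 0L);
            lag.put(entry.getKey(), Math.max(0L, entry.getValue() - committed));
        }
        return lag;
    }

    /** Total lag across all partitions of the topic. */
    static long totalLag(Map<Integer, Long> lagPerPartition) {
        return lagPerPartition.values().stream().mapToLong(Long::longValue).sum();
    }
}
```

Lag that keeps growing means consumers are falling behind producers, which is usually the signal to add consumer instances or partitions.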

Challenges and Expert Solutions

Message Ordering

Challenge: Ensuring messages are processed in order.

Solution: Use the same key for messages that need ordering, so they go to the same partition.
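The reason a shared key preserves order is that the partition is derived from a hash of the key, and Kafka only guarantees ordering within a single partition. The sketch below shows the idea with String.hashCode() as a stand-in; Kafka's default partitioner uses a different hash (murmur2), so the actual partition numbers will differ. The class and method names are hypothetical.

```java
/** Illustrates key-based partition selection; the hash is a stand-in for Kafka's murmur2. */
class KeyPartitionerDemo {

    /** Same key and same partition count always yield the same partition. */
    static int partitionFor(String key, int numPartitions) {
        // floorMod keeps the result non-negative even when hashCode() is negative.
        return Math.floorMod(key.hashCode(), numPartitions);
    }
}
```

Every message keyed with, say, the same order ID lands in one partition and is read in the order it was written. The mapping depends on the partition count: with this toy hash, the key "d" maps to partition 1 when there are three partitions and to partition 4 when there are six, so ordering for a key is not preserved across a partition increase.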

Data Serialization Formats

Challenge: Handling different data formats between microservices.

Solution: Use a common serialization format like Apache Avro or JSON Schema with a schema registry.

Security Concerns

Challenge: Securing communication between microservices.

Solution:

Enable SSL/TLS encryption.

Use SASL for authentication.

Implement Access Control Lists (ACLs) for authorization.
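On the client side, encryption and authentication are switched on through configuration properties. The sketch below builds such a configuration, assuming the cluster uses SASL over TLS with the SCRAM-SHA-512 mechanism; your cluster may use a different mechanism, and the class name, method name, and parameters are hypothetical. ACLs are defined on the brokers and do not appear in client configuration.

```java
import java.util.Properties;

/** Builds client properties for TLS encryption plus SASL/SCRAM authentication. */
class SecureClientConfig {

    static Properties secureProps(String bootstrapServers, String username, String password,
                                  String truststorePath, String truststorePassword) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        // SASL_SSL = authenticate with SASL over a TLS-encrypted connection.
        props.put("security.protocol", "SASL_SSL");
        // Assumption: the brokers are configured for SCRAM-SHA-512.
        props.put("sasl.mechanism", "SCRAM-SHA-512");
        props.put("sasl.jaas.config",
                "org.apache.kafka.common.security.scram.ScramLoginModule required "
                        + "username=\"" + username + "\" password=\"" + password + "\";");
        // Truststore holding the CA certificate used to verify the brokers.
        props.put("ssl.truststore.location", truststorePath);
        props.put("ssl.truststore.password", truststorePassword);
        return props;
    }
}
```

The returned properties can be merged with the serializer or deserializer settings shown earlier before creating a producer or consumer. In practice, credentials should come from a secrets store or environment variables rather than source code.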

How Codersarts Can Help

At Codersarts, we understand that building a scalable and reliable messaging system can be challenging. Our team of experts is ready to assist you with:

Our Services

Assignment Assistance: Get help with implementing Kafka producers and consumers.

Code Review: Receive feedback on your code to improve efficiency and reliability.

Scalability Solutions: Learn how to scale your Kafka cluster and optimize performance.

Fault Tolerance Strategies: Get guidance on implementing replication and handling failures.

One-on-One Tutoring: Personalized sessions to deepen your understanding of Kafka.

Why Choose Codersarts

Industry Experts: Work with professionals experienced in distributed systems and microservices.

Customized Solutions: Tailored assistance to meet your specific assignment requirements.

Timely Support: Quick turnaround to help you meet your deadlines.

24/7 Availability: We're here to help whenever you need us.

Conclusion

Implementing a messaging system using Apache Kafka empowers you to build robust, scalable, and reliable microservices architectures. By mastering Kafka's producers, consumers, and its powerful features like replication and partitioning, you can ensure efficient communication between microservices while handling increasing workloads and failures gracefully.

This assignment provides practical experience that is highly valuable in today's tech landscape, where real-time data processing and microservices are the norms.

Ready to excel in building messaging systems with Kafka? If you need expert guidance or assistance with your assignment, Codersarts is here to help you achieve your goals.

Get Expert Help Today!
