Implementing a Transformer Language Model Help

- Codersarts

- Nov 2, 2024


Hello Everyone, Welcome to Codersarts!

In this blog, we’ll explore the step-by-step process of implementing a Transformer language model. Whether you're a student working on assignments or a developer looking to deepen your understanding, this guide will walk you through the key concepts and hands-on tasks involved in building Transformer-based models.

We've structured the content to function like assignment tasks with clear instructions, enabling you to implement and test the model as a proof of concept. This approach not only ensures you gain practical experience but also helps reinforce your understanding of self-attention mechanisms, multi-head attention, and positional encoding — all critical components of Transformer architectures.

By the end of this tutorial, you’ll be equipped with the knowledge to:

Build a Transformer model from scratch using frameworks like PyTorch or TensorFlow.

Train the model on language modeling tasks with appropriate datasets.

Apply fine-tuning techniques to improve model performance.

Solve common challenges related to training dynamics, attention issues, and GPU setup.

So, whether you’re here for assignment help, troubleshooting advice, or project support, this blog will provide everything you need to succeed in Transformer-based NLP tasks.

This assignment will help you gain hands-on experience with Transformer models by implementing character-based Transformers for sequence prediction and language modeling tasks. You will build a simplified Transformer from scratch, experiment with attention mechanisms, and implement language modeling techniques using Python and PyTorch.

Goals

Understand self-attention mechanisms in Transformers.

Implement a basic Transformer encoder without relying on pre-built libraries.

Use transformer-based architectures for language modeling tasks.

Evaluate the perplexity and accuracy of the implemented model.

Tools and Dataset

Use Python and PyTorch (latest versions).

The dataset is a character-based subset of Wikipedia’s first 100M characters, processed to use 27 character types.

Use the provided utils.py and letter_counting.py files for setting up tasks.
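Before any modeling, each character has to be mapped to an integer index. The snippet below is a minimal sketch of that mapping, not the contents of the provided utils.py. It assumes the 27 character types are the 26 lowercase letters plus space; the names VOCAB, encode, and decode are hypothetical.

```python
import string

# Assumption: the 27 character types are a-z plus the space character.
VOCAB = list(string.ascii_lowercase) + [" "]
CHAR_TO_INDEX = {ch: i for i, ch in enumerate(VOCAB)}
INDEX_TO_CHAR = {i: ch for ch, i in CHAR_TO_INDEX.items()}


def encode(text):
    """Map a string to a list of integer indices."""
    return [CHAR_TO_INDEX[ch] for ch in text]


def decode(indices):
    """Map a list of integer indices back to a string."""
    return "".join(INDEX_TO_CHAR[i] for i in indices)


if __name__ == "__main__":
    ids = encode("hello world")
    print(ids)
    print(decode(ids))
```

The integer indices are what an embedding layer would consume as input.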

Part 1: Build a Transformer Encoder (50 points)

Implement a simplified Transformer encoder from scratch for a character-sequence prediction task. Your task is to predict how many times each character in a sequence has occurred previously. You will learn to build a single-head attention mechanism and residual connections.

Steps to Implement:

Prepare the Transformer Layer:

Build query, key, and value matrices using linear layers.

Use matrix multiplication for self-attention.

Add residual connections and apply non-linearity (ReLU).
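The three sub-steps above can be sketched as a single PyTorch module. This is one possible layout under stated assumptions, not the assignment's reference solution: the class name, the d_internal parameter, the scaling by the square root of the key dimension, and the small feed-forward block are illustrative choices.

```python
import math

import torch
import torch.nn as nn


class SingleHeadTransformerLayer(nn.Module):
    """Single-head self-attention followed by a ReLU feed-forward block."""

    def __init__(self, d_model, d_internal):
        super().__init__()
        # Linear layers that produce the query, key, and value matrices.
        self.query = nn.Linear(d_model, d_internal)
        self.key = nn.Linear(d_model, d_internal)
        self.value = nn.Linear(d_model, d_model)
        # Feed-forward block with a ReLU non-linearity (an assumed layout).
        self.ff = nn.Sequential(
            nn.Linear(d_model, d_internal),
            nn.ReLU(),
            nn.Linear(d_internal, d_model),
        )
        self.d_internal = d_internal

    def forward(self, x):
        # x: [seq_len, d_model]
        q, k, v = self.query(x), self.key(x), self.value(x)
        # Attention scores via matrix multiplication: [seq_len, seq_len].
        scores = q @ k.transpose(-2, -1) / math.sqrt(self.d_internal)
        attn = torch.softmax(scores, dim=-1)
        x = x + attn @ v      # residual connection around attention
        x = x + self.ff(x)    # residual connection around feed-forward
        return x, attn


layer = SingleHeadTransformerLayer(d_model=16, d_internal=8)
out, attn = layer(torch.randn(20, 16))  # a 20-position sequence
print(out.shape, attn.shape)
```

Returning the attention matrix alongside the output makes it easy to plot and inspect later.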

Train the Transformer Encoder:

Train the model using a 20-character sequence input.

Use cross-entropy loss to compute the prediction loss for all positions in the sequence.

Achieve at least 85% accuracy on the task.
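A single training step with a per-position loss might look like the sketch below. Several things here are assumptions rather than details from the assignment: the counts are capped so there are three classes (0, 1, and 2 or more), the helper previous_count_labels is hypothetical, and a tiny embedding-plus-linear stand-in replaces the real Transformer encoder so the example stays self-contained.

```python
import torch
import torch.nn as nn

NUM_CLASSES = 3  # assumption: classes are 0, 1, and "2 or more"


def previous_count_labels(indices):
    """For each position, count earlier occurrences of the same character, capped."""
    seen = {}
    labels = []
    for idx in indices:
        labels.append(min(seen.get(idx, 0), NUM_CLASSES - 1))
        seen[idx] = seen.get(idx, 0) + 1
    return labels


torch.manual_seed(0)
# Stand-in model: replace with your Transformer encoder.
model = nn.Sequential(nn.Embedding(27, 32), nn.Linear(32, NUM_CLASSES))
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
loss_fn = nn.CrossEntropyLoss()

inputs = torch.randint(0, 27, (20,))  # one 20-character sequence
targets = torch.tensor(previous_count_labels(inputs.tolist()))

logits = model(inputs)            # [20, NUM_CLASSES], one prediction per position
loss = loss_fn(logits, targets)   # averaged over all 20 positions
optimizer.zero_grad()
loss.backward()
optimizer.step()

accuracy = (logits.argmax(dim=-1) == targets).float().mean().item()
print(f"loss={loss.item():.3f} accuracy={accuracy:.2f}")
```

Because nn.CrossEntropyLoss averages over its first dimension by default, passing the full [20, NUM_CLASSES] logits covers every position in one call.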

Run the Model:

Use the following command to execute your implementation:

python letter_counting.py --task BEFOREAFTER

Part 2: Transformer for Language Modeling (50 points)

In this part, you will extend the Transformer architecture to build a language model capable of predicting the next character in a sequence.

Implementation Steps:

Use Positional Encodings:

Implement positional embeddings to handle sequence ordering.

Use causal masking to prevent tokens from attending to future tokens.
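Both ideas fit in a few lines of PyTorch. The sketch below assumes learned positional embeddings (fixed sinusoidal encodings would be an alternative) and a boolean upper-triangular mask; the names PositionalEmbedding, causal_mask, and masked_attention_weights are hypothetical.

```python
import torch
import torch.nn as nn


class PositionalEmbedding(nn.Module):
    """Adds a learned vector for each position to the input embeddings."""

    def __init__(self, d_model, max_len=20):
        super().__init__()
        self.emb = nn.Embedding(max_len, d_model)

    def forward(self, x):
        # x: [seq_len, d_model]
        positions = torch.arange(x.shape[-2], device=x.device)
        return x + self.emb(positions)


def causal_mask(seq_len):
    """True above the diagonal, marking the future positions to block."""
    return torch.triu(torch.ones(seq_len, seq_len, dtype=torch.bool), diagonal=1)


def masked_attention_weights(scores):
    """Softmax over scores with future positions set to -inf beforehand."""
    mask = causal_mask(scores.shape[-1])
    return torch.softmax(scores.masked_fill(mask, float("-inf")), dim=-1)


weights = masked_attention_weights(torch.randn(5, 5))
print(weights)  # the upper triangle is all zeros
```

Every row keeps at least its diagonal entry unmasked, so the softmax never sees a row made entirely of -inf.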

Train the Language Model:

Train the model on the first 100,000 characters of Wikipedia.

Evaluate the model on the development set and achieve a perplexity below 7.
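Perplexity is the exponential of the average negative log-likelihood per character, so lower is better. The helper below is a small illustration with a hypothetical function name. It assumes you have already collected the natural-log probability the model assigned to each gold character.

```python
import math


def perplexity(log_probs):
    """exp of the mean negative natural-log probability per character."""
    return math.exp(-sum(log_probs) / len(log_probs))


# Sanity check: a model that guesses uniformly over 27 characters
# has a perplexity of about 27, so a target below 7 is far better than chance.
uniform = [math.log(1 / 27)] * 500
print(perplexity(uniform))
```

If the model outputs log-softmax scores, the values to pass in are the entries at the index of each gold next character.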

Run the Model:

Use the following command to train the language model:

python lm.py

Optimization Tip: Implement multi-head attention to improve model performance, and explore the attention patterns to understand how your model makes predictions.
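One way to approach multi-head attention is to split the model dimension across several heads, run attention in each, and merge the results. The module below is a rough sketch under assumed design choices: a fused query/key/value projection, an optional causal mask, and unbatched [seq_len, d_model] input. The class name is hypothetical.

```python
import math

import torch
import torch.nn as nn


class MultiHeadSelfAttention(nn.Module):
    def __init__(self, d_model, num_heads):
        super().__init__()
        assert d_model % num_heads == 0, "d_model must divide evenly across heads"
        self.num_heads = num_heads
        self.d_head = d_model // num_heads
        self.qkv = nn.Linear(d_model, 3 * d_model)  # fused Q, K, V projection
        self.out = nn.Linear(d_model, d_model)

    def forward(self, x, causal=True):
        # x: [seq_len, d_model]
        seq_len, d_model = x.shape
        q, k, v = self.qkv(x).chunk(3, dim=-1)
        # Reshape each to [num_heads, seq_len, d_head].
        q = q.reshape(seq_len, self.num_heads, self.d_head).transpose(0, 1)
        k = k.reshape(seq_len, self.num_heads, self.d_head).transpose(0, 1)
        v = v.reshape(seq_len, self.num_heads, self.d_head).transpose(0, 1)
        scores = q @ k.transpose(-2, -1) / math.sqrt(self.d_head)
        if causal:
            mask = torch.triu(
                torch.ones(seq_len, seq_len, dtype=torch.bool, device=x.device),
                diagonal=1,
            )
            scores = scores.masked_fill(mask, float("-inf"))
        attn = torch.softmax(scores, dim=-1)   # [num_heads, seq_len, seq_len]
        heads = attn @ v                       # [num_heads, seq_len, d_head]
        merged = heads.transpose(0, 1).reshape(seq_len, d_model)
        return self.out(merged), attn


mha = MultiHeadSelfAttention(d_model=16, num_heads=4)
out, attn = mha(torch.randn(20, 16))
print(out.shape, attn.shape)
```

The returned attn tensor holds one attention map per head, which can be plotted as heatmaps to explore the attention patterns.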

Deliverables and Submission Guidelines

Submit your code files for Parts 1 and 2.

Your implementation should pass sanity checks using the following commands:

python letter_counting.py

python lm.py --model NEURAL

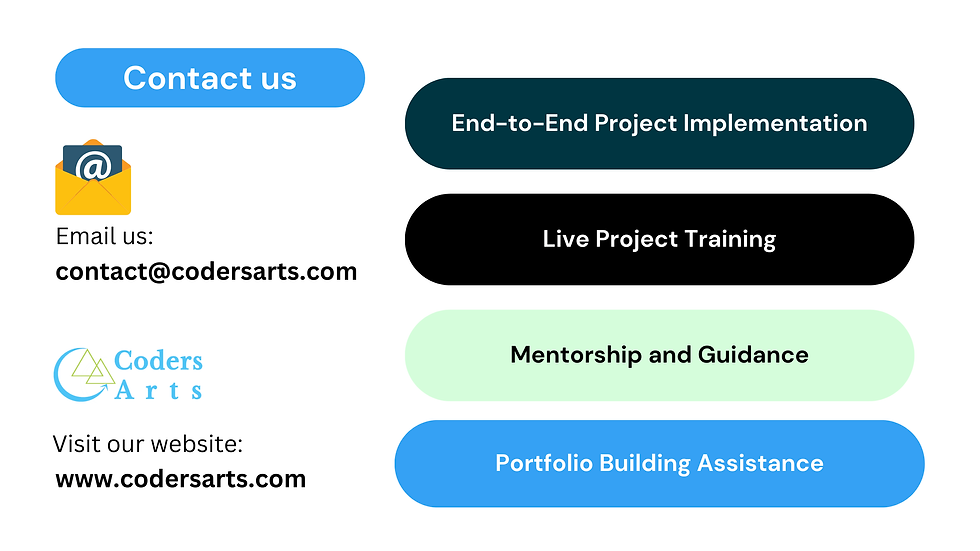

Need help with Transformer models or language modeling assignments? Codersarts offers AI/ML assignment help with expert guidance on building self-attention mechanisms, training language models, and implementing Transformer encoders from scratch.

Relevant Services Offered by Codersarts

AI assignment help for Transformer language modeling and NLP projects

Python code implementation for neural networks and deep learning tasks

Support for PyTorch-based Transformer models

Expert guidance for NLP assignments using Hugging Face transformers

1:1 mentoring sessions to debug and improve your code

Comprehensive help with language model evaluation and error analysis


How Codersarts Helps with Implementing a Transformer Language Model

Based on user demand, emerging trends, and future prospects in AI and NLP, Codersarts has identified the growing need for specialized support in Transformer-based projects. Transformer architectures are at the core of many cutting-edge NLP applications, including language modeling, text generation, and machine translation. As more students, developers, and businesses adopt these technologies, the demand for practical, hands-on guidance continues to rise.

Codersarts recognizes that many users seek coding support, troubleshooting assistance, and assignment help to build, train, and fine-tune Transformer models efficiently. With Transformers evolving into industry standards for NLP tasks, it's crucial to offer services that not only address academic challenges but also empower developers to leverage these models for real-world applications.

Our tailored services meet these needs by providing expert mentoring, code debugging, project optimization, and troubleshooting support. Whether students need help completing assignments or developers seek guidance to deploy Transformer models, Codersarts ensures comprehensive solutions, aligning with current educational trends and technical requirements.

Step-by-Step Tutorials and Practical Guidance

Many users search for detailed tutorials with code examples on Transformer models using Python, PyTorch, TensorFlow, and Hugging Face libraries. At Codersarts, we provide:

Hands-on coding guidance to implement self-attention mechanisms and positional encodings.

In-depth tutorials for building language models from scratch, designed to help you master Transformer architectures.

Academic and Assignment Help for Students

Students often need help completing coursework and assignments involving Transformer models. Codersarts offers:

Assignment solutions with personalized mentoring on multi-head attention, hyperparameter tuning, and language modeling tasks.

Debugging support to resolve issues in Transformer-based assignments and ensure high-quality project submissions.

Developer Support for Real-World Projects

Developers working on text generation, machine translation, or sentiment analysis projects often require detailed explanations and technical support. Codersarts provides:

One-on-one mentoring for developers to optimize model performance and adjust architectures for specific tasks.

Help with using pre-trained Transformer models and integrating NLP libraries like Hugging Face Transformers.

Troubleshooting and Optimization for Transformer Models

Many users face challenges related to GPU setup, gradient calculation errors, or training stability. Codersarts offers:

Performance optimization support, helping you reduce perplexity and improve model accuracy.

Debugging sessions to identify bottlenecks in your Transformer architecture and ensure smooth training.

Integration with NLP Tools and Libraries

Codersarts helps developers integrate pre-trained models and use frameworks like Hugging Face to streamline Transformer implementation for tasks beyond academic use. Our experts ensure seamless deployment of your models for business applications and AI-powered solutions.

Code Examples and Customized Implementation

Our team offers ready-to-use code snippets and personalized coding support for implementing critical components like:

Self-attention mechanisms

Transformer encoders and decoders

Hyperparameter tuning for model optimization

Whether you are training a Transformer from scratch or fine-tuning a pre-trained model, our mentors guide you through every step.

Practical Application and Troubleshooting

For students and developers working on specific NLP tasks such as text generation, machine translation, or speech recognition, Codersarts provides:

Hands-on help with building and evaluating language models.

Debugging assistance for issues like training instability, attention-related problems, or GPU configuration errors.

Best practices for optimizing model performance and reducing metrics like perplexity during training.

Assignment Help and AI Project Mentorship

Codersarts specializes in helping students complete their AI/ML assignments on time with well-documented code and project reports. Services include:

1:1 mentoring sessions to help you better understand concepts like self-attention and sequence prediction models.

Project reviews and debugging support to identify bottlenecks and improve model accuracy.

Get Help with Your Transformer Model Today!

Codersarts bridges the gap between theory and practical implementation, providing personalized support to meet your academic and professional goals. Whether you need assignment help or hands-on project assistance, Codersarts ensures you excel in NLP and AI projects involving Transformer models.

Why Choose Codersarts for Transformer Model Help?

Dedicated Experts: Work with professionals experienced in Transformer architectures and NLP techniques.

Tailored Solutions: Receive support customized to your project or assignment needs.

Timely Delivery: Get quick help to meet academic deadlines.

Troubleshooting and Debugging: Solve complex coding issues with ease.

Codersarts provides exactly what students and developers search for—comprehensive tutorials, coding support, and debugging assistance—making it the perfect partner for mastering Transformer models.

Discover more about the Transformer Language Model.
