Implementing Kafka Streams for Real-Time Analytics

- Codersarts

- Oct 22, 2024


Are you ready to take your data processing skills to the next level? Real-time analytics has become a cornerstone in today's data-driven landscape, enabling businesses to make swift, informed decisions. Apache Kafka Streams is a powerful tool that simplifies building real-time applications and microservices.

In this comprehensive guide, we'll walk you through an assignment that will help you master real-time analytics using Kafka Streams.

Introduction

In an era where data is generated at an unprecedented rate, the ability to process and analyze information in real time is invaluable. From financial markets to social media trends, real-time analytics allows organizations to respond promptly to emerging patterns and anomalies.

Kafka Streams is a lightweight, easy-to-use library for building robust stream processing applications. It leverages the scalability and fault tolerance of Apache Kafka, making it an ideal choice for real-time analytics tasks.

Assignment Overview

Objective

The goal of this assignment is to implement a real-time analytics application using Kafka Streams. You will:

Simulate a data stream of numerical values (e.g., stock prices).

Compute rolling averages over a specified time window.

Detect anomalies based on deviations from the rolling average.

Write the processed data and alerts back to Kafka topics.

Expected Outcomes

By completing this assignment, you will:

Gain hands-on experience with the Kafka Streams API.

Understand how to perform stateful stream processing.

Learn to compute windowed aggregations.

Implement real-time anomaly detection logic.

Enhance your skills in building scalable, real-time applications.

Detailed Assignment Steps

1. Kafka Setup Review

Before diving into the application development, ensure that your Kafka environment is correctly set up.

Kafka Installation

Prerequisites: Java 8 or later.

Download Kafka: Obtain the latest Kafka binaries from the official website.

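Start ZooKeeper (needed only for ZooKeeper-based Kafka releases; Kafka 4.0 and later run in KRaft mode and no longer ship this script):

bin/zookeeper-server-start.sh config/zookeeper.properties

Start Kafka Broker:

bin/kafka-server-start.sh config/server.properties

Create Kafka Topics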

Input Topic (raw-data):

bin/kafka-topics.sh --create --topic raw-data --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1

Output Topic (processed-data):

bin/kafka-topics.sh --create --topic processed-data --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1

2. Data Generation

Simulating Numerical Data

Create a Kafka Producer that simulates a stream of numerical data, such as stock prices.

Producer Implementation in Python

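from kafka import KafkaProducer
import json
import time
import random

# Key each record by symbol so the Streams application can group by key;
# records with a null key are dropped by groupByKey().
producer = KafkaProducer(
    bootstrap_servers=['localhost:9092'],
    key_serializer=lambda k: k.encode('utf-8'),
    value_serializer=lambda v: json.dumps(v).encode('utf-8'),
)

while True:
    # Simulated quote: random price with the current time in milliseconds
    data = {
        'symbol': 'AAPL',
        'price': round(random.uniform(100, 200), 2),
        'timestamp': int(time.time() * 1000),
    }
    producer.send('raw-data', key=data['symbol'], value=data)
    print(f"Sent: {data}")
    time.sleep(1)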

Explanation

Data Fields:

symbol: Stock ticker symbol.

price: Randomly generated stock price.

timestamp: Current time in milliseconds.

Serialization: Data is serialized to JSON before sending to Kafka.

Sending Data: Messages are sent to the raw-data topic every second.

3. Developing the Kafka Streams Application

Project Setup

Language Choice: Java or Scala.

Build Tool: Maven or Gradle.

Dependencies:

org.apache.kafka:kafka-streams

org.apache.kafka:kafka-clients

Logging libraries (e.g., SLF4J)

A JSON library (the code below uses JSONObject, which matches the org.json API)

Initializing the Project

Example using Maven (Java):

<dependencies>
  <dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-streams</artifactId>
    <version>2.8.0</version>
  </dependency>
  <!-- Additional dependencies -->
</dependencies>

Reading from the raw-data Topic

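import java.util.Properties;
import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.streams.StreamsBuilder;
import org.apache.kafka.streams.StreamsConfig;
import org.apache.kafka.streams.kstream.KStream;

// Application ID, broker address, and default String serdes for keys and values
Properties props = new Properties();
props.put(StreamsConfig.APPLICATION_ID_CONFIG, "real-time-analytics");
props.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");
props.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());
props.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());

StreamsBuilder builder = new StreamsBuilder();
KStream<String, String> rawStream = builder.stream("raw-data");

4. Processing Logic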

Computing Rolling Averages

Use windowed aggregations to compute the rolling average over a specified time window.
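The arithmetic behind a windowed average can be sketched without Kafka. The class below is a hypothetical, plain-Java illustration (the names WindowedAverage, windowStart, and add are assumptions, not part of the Kafka Streams API). It assigns each timestamp to a fixed-size window and keeps a running sum and count per window, which is the state a true mean needs.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical illustration, not Kafka Streams API: per-window sum and count.
class WindowedAverage {
    private final long windowSizeMs;
    // window start (epoch ms) -> {running sum, running count}
    private final Map<Long, double[]> state = new HashMap<>();

    WindowedAverage(long windowSizeMs) {
        this.windowSizeMs = windowSizeMs;
    }

    // Align a timestamp to the start of its fixed-size window.
    long windowStart(long timestampMs) {
        return timestampMs - (timestampMs % windowSizeMs);
    }

    // Record a price and return the updated average for its window.
    double add(long timestampMs, double price) {
        double[] acc = state.computeIfAbsent(windowStart(timestampMs), k -> new double[2]);
        acc[0] += price; // running sum
        acc[1] += 1;     // running count
        return acc[0] / acc[1];
    }
}
```

Feeding 100, 200, and 300 into the same window yields an average of 200. A naive update of the form (previous + new) / 2, starting from 0, would instead yield 212.5, because it weights recent values more heavily and never tracks the count.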

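KTable<Windowed<String>, Double> rollingAvg = rawStream
    // Parse JSON and extract price
    .mapValues(value -> new JSONObject(value).getDouble("price"))
    // Assumes records are keyed by symbol; values are now Double, so set the serdes explicitly
    .groupByKey(Grouped.with(Serdes.String(), Serdes.Double()))
    // Five-minute tumbling windows
    .windowedBy(TimeWindows.of(Duration.ofMinutes(5)))
    .aggregate(
        // Track "sum,count" so the true mean can be derived
        () -> "0.0,0",
        (key, price, agg) -> {
            String[] parts = agg.split(",");
            return (Double.parseDouble(parts[0]) + price) + "," + (Long.parseLong(parts[1]) + 1);
        },
        Materialized.with(Serdes.String(), Serdes.String())
    )
    // Convert "sum,count" into the average for the window
    .mapValues(agg -> {
        String[] parts = agg.split(",");
        return Double.parseDouble(parts[0]) / Long.parseLong(parts[1]);
    });

Anomaly Detection Logic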
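The deviation check itself is independent of Kafka and can be sketched in plain Java. The class below is a hypothetical illustration: the name AnomalyDetector, the relative-deviation rule, and the threshold value are all assumptions, since the assignment does not prescribe a specific rule.

```java
// Hypothetical illustration: flags a price whose relative deviation from
// the rolling average exceeds a chosen threshold (the rule is an assumption).
class AnomalyDetector {
    private final double threshold; // e.g. 0.10 means 10% away from the average

    AnomalyDetector(double threshold) {
        this.threshold = threshold;
    }

    boolean isAnomaly(double price, double rollingAverage) {
        if (rollingAverage == 0.0) {
            return false; // no meaningful baseline yet
        }
        double deviation = Math.abs(price - rollingAverage) / Math.abs(rollingAverage);
        return deviation > threshold;
    }
}
```

With a threshold of 0.10 and a rolling average of 100, a price of 120 is flagged and a price of 105 is not. In a Streams application, the rollingAverage argument would have to come from the windowed aggregation, for example by reading it from a state store.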

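KStream<String, String> anomalies = rawStream
    .mapValues(value -> {
        JSONObject json = new JSONObject(value);
        double price = json.getDouble("price");
        // Placeholder baseline and threshold: replace the baseline with the rolling
        // average for this key (for example, read from a state store) and tune the limit.
        double baseline = 150.0;
        double threshold = 0.10;
        // If the deviation is significant, mark the record as an anomaly
        boolean isAnomaly = Math.abs(price - baseline) / baseline > threshold;
        return json.put("anomaly", isAnomaly).toString();
    })
    // Keep only the records flagged as anomalies
    .filter((key, value) -> new JSONObject(value).getBoolean("anomaly"));

5. Writing to the Output Topic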

Serialization and Data Formats

Ensure that the data is serialized correctly when writing back to Kafka.

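anomalies.to("processed-data", Produced.with(Serdes.String(), Serdes.String()));

Value Serde: Use String Serde or JSON Serde for message values.

Data Format: Maintain consistent JSON structure for ease of downstream processing.

Starting the Application: Nothing is processed until the topology is built and started, for example with new KafkaStreams(builder.build(), props).start().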

6. Stateful Processing

Using State Stores

State stores in Kafka Streams enable stateful operations like windowed aggregations.

StoreBuilder<KeyValueStore<String, Double>> storeBuilder = Stores.keyValueStoreBuilder(
    Stores.persistentKeyValueStore("rolling-avg-store"),
    Serdes.String(),
    Serdes.Double()
);
// A store added this way must be connected to a processor or transformer by name to be used.
builder.addStateStore(storeBuilder);

Materialization: Aggregations are materialized in state stores.

Accessing State: You can query the state store for the current rolling average.

7. Optional Visualization

Setting Up Grafana

Data Source: Use a time-series database like InfluxDB or Prometheus.

Integration:

Write processed data from Kafka to the database.

Use Kafka Connect for seamless data transfer.

Visualizing Data

Create Dashboards: Set up charts to display real-time price data and anomalies.

Customize Alerts: Configure thresholds to trigger visual or email alerts.

Challenges and Solutions

State Store Management

Challenge: Managing state store size and retention.

Solution:

Set appropriate retention periods.

Regularly monitor state store sizes.
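To make the effect of a retention period concrete, here is a minimal plain-Java sketch. It is a hypothetical illustration, not how Kafka Streams implements its window stores: the class RetainedWindows and its methods are assumptions. It keeps one average per window and discards windows that started before the retention cutoff, so the store size stays bounded.

```java
import java.util.TreeMap;

// Hypothetical illustration of time-based retention; not the Kafka Streams implementation.
class RetainedWindows {
    private final long retentionMs;
    private final TreeMap<Long, Double> averagesByWindowStart = new TreeMap<>();

    RetainedWindows(long retentionMs) {
        this.retentionMs = retentionMs;
    }

    // Store a window's average, then drop windows that started before the cutoff.
    void put(long windowStartMs, double average, long streamTimeMs) {
        averagesByWindowStart.put(windowStartMs, average);
        // headMap is exclusive of the cutoff key; clearing the view removes entries from the map
        averagesByWindowStart.headMap(streamTimeMs - retentionMs).clear();
    }

    int size() {
        return averagesByWindowStart.size();
    }
}
```

With five-minute windows and a ten-minute retention, the map never holds more than three windows per key in this sketch. A longer retention keeps more history available for queries at the cost of a larger store.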

Performance Tuning

Challenge: High latency or throughput bottlenecks.

Solution:

Optimize configurations like commit.interval.ms.

Scale application instances horizontally.
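As a rough sketch of what such tuning looks like, the helper below collects two Kafka Streams settings in a java.util.Properties object. The class name TuningConfig and the chosen values are assumptions for illustration only; suitable values depend on your workload.

```java
import java.util.Properties;

// Hypothetical helper; the values are illustrative starting points, not recommendations.
class TuningConfig {
    static Properties tuningProps() {
        Properties props = new Properties();
        // How often processing progress is committed. Lower values reduce
        // end-to-end latency at the cost of more commit overhead.
        props.put("commit.interval.ms", "1000");
        // Stream threads per application instance. Threads beyond the number
        // of input partitions stay idle.
        props.put("num.stream.threads", "2");
        return props;
    }
}
```

These entries can be merged into the application's configuration with props.putAll(TuningConfig.tuningProps()) before the KafkaStreams instance is created.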

Troubleshooting Tips

Serialization Errors: Ensure consistent Serde configurations.

Missing Messages: Check topic configurations and partition assignments.

Application Crashes: Use robust exception handling and logging.

Expert Advice

Best Practices for Kafka Streams Applications

Stateless vs. Stateful Processing: Use stateless operations when possible for simplicity and performance.

Fault Tolerance: Leverage Kafka's built-in fault tolerance by correctly configuring application IDs and state stores.

Testing: Implement unit and integration tests using TopologyTestDriver.

Scalability and Fault Tolerance

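Scaling: Increase parallelism by adding more application instances with the same application ID. Parallelism is capped by the number of input topic partitions, so a single-partition raw-data topic supports only one active task; create the topic with more partitions if you plan to scale out.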

Resilience: Kafka Streams handles rebalancing and state migration automatically.

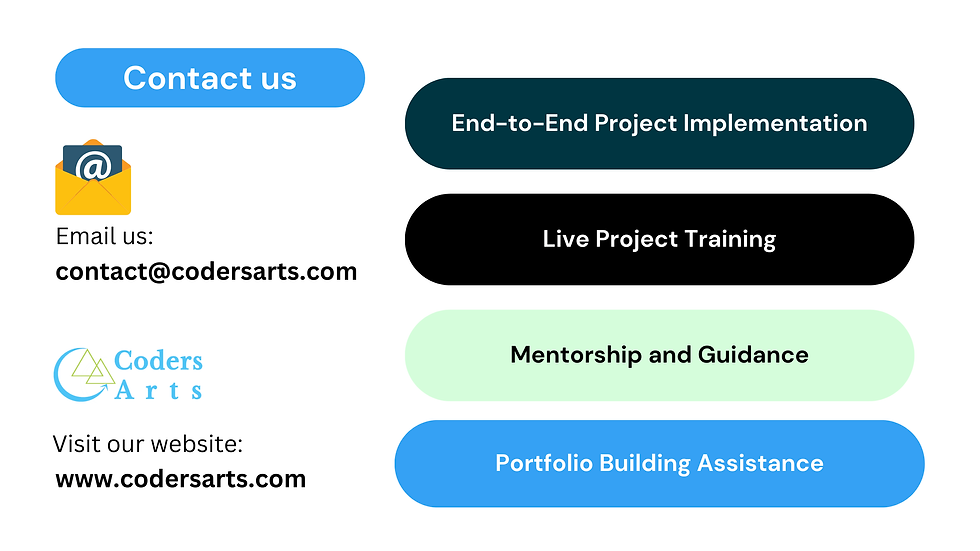

Codersarts Services

At Codersarts, we specialize in providing expert assistance for Kafka Streams assignments and projects.

How We Can Help

One-on-One Tutoring: Personalized guidance to help you understand complex concepts.

Assignment Assistance: Tailored support to meet your specific assignment requirements.

Code Review: Professional feedback to enhance code quality and efficiency.

Project Development: End-to-end development support for your Kafka Streams applications.

Why Choose Codersarts

Industry Experts: Work with professionals experienced in real-time analytics and stream processing.

Customized Solutions: Get solutions that are aligned with your learning goals.

Timely Delivery: We ensure that you meet your deadlines without compromising on quality.

24/7 Support: Our team is available around the clock to address your queries.

Conclusion

Implementing real-time analytics using Kafka Streams is a valuable skill that opens up numerous opportunities in the data engineering field. This assignment guides you through the essential steps of developing a robust stream processing application, from data ingestion to anomaly detection and visualization.

By mastering these concepts, you're well on your way to becoming proficient in building scalable, real-time data processing systems.

Ready to tackle Kafka Streams assignments with confidence? If you need expert assistance or guidance, Codersarts is here to help you succeed.

Get Expert Help Today!

