LLM Output Analysis and Error Evaluation - Get AI Assignment Help

- Codersarts

- Oct 27, 2024


This assignment gives you hands-on experience in fact-checking outputs from Large Language Models (LLMs) and analyzing model accuracy. Using Python and Hugging Face tools, you will implement word overlap and entailment-based methods for fact verification. This practical work will deepen your understanding of AI model evaluation, textual entailment, and error analysis.

Assignment Tasks

Dataset & Tools:

Use Python, PyTorch, and the Hugging Face transformers library. Dependencies are provided in the requirements.txt file. You will analyze factual outputs from LLMs by comparing them with Wikipedia-based passages.

Part 1: Word Overlap for Fact Verification (30 points)

Implement a word overlap-based method to classify facts as "Supported" or "Not Supported." Use bag-of-words, cosine similarity, or Jaccard similarity to measure overlap between retrieved Wikipedia passages and facts generated by the LLM.

Implement Overlap Score:

Tokenize text into words.

Optionally, apply stemming and remove stopwords.

Calculate similarity scores between retrieved passages and facts.
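
The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the assignment's reference solution: the function names and the tiny stopword list are assumptions, and stemming is left out for brevity.

```python
import re

# Small illustrative stopword list (an assumption; a fuller list from NLTK or spaCy could be swapped in).
STOPWORDS = {"a", "an", "the", "of", "in", "on", "and", "is", "was", "to", "by"}

def tokenize(text):
    """Lowercase the text, split it into alphanumeric tokens, and drop stopwords."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return {t for t in tokens if t not in STOPWORDS}

def jaccard(fact, passage):
    """Jaccard similarity between the token sets of a fact and a passage."""
    a, b = tokenize(fact), tokenize(passage)
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)

def fact_recall(fact, passage):
    """Fraction of the fact's tokens that also appear in the passage."""
    a, b = tokenize(fact), tokenize(passage)
    return len(a & b) / len(a) if a else 0.0
```

Plain Jaccard penalizes long passages because the union grows with passage length. A fact-side recall such as `fact_recall` is one possible alternative when passages are much longer than facts.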

Accuracy Requirement:

Achieve at least 75% accuracy on the dataset. Tune thresholds to improve performance.
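
One simple way to tune the threshold is to try every observed score as a cutoff and keep the one with the highest accuracy. The sketch below assumes scores and gold labels are already available as parallel lists; the helper names and the toy data are made up for illustration.

```python
def accuracy_at(threshold, scores, labels):
    """Accuracy when a score >= threshold is predicted as Supported (True)."""
    correct = sum((s >= threshold) == y for s, y in zip(scores, labels))
    return correct / len(labels)

def best_threshold(scores, labels):
    """Try each observed score as a cutoff and return (threshold, accuracy) for the best one."""
    candidates = sorted(set(scores))
    return max(((t, accuracy_at(t, scores, labels)) for t in candidates),
               key=lambda pair: pair[1])

# Toy data (invented for illustration): overlap scores and gold labels.
scores = [0.9, 0.7, 0.4, 0.2, 0.1]
labels = [True, True, False, True, False]
threshold, acc = best_threshold(scores, labels)
print(f"best threshold={threshold}, accuracy={acc:.2f}")
```

If the dataset provides a separate development split, tuning on that split and reporting on the rest gives a more honest accuracy estimate.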

Submission:

Include your Python code and a brief report describing:

Tokenization choices

Similarity metric used (e.g., cosine, Jaccard)

Performance metrics (accuracy, precision, recall)
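
The performance metrics for the report can be computed directly from predictions and gold labels. A minimal sketch, assuming both are lists of booleans with True meaning "Supported" (the function name is illustrative):

```python
def classification_metrics(predictions, labels):
    """Accuracy, precision, and recall with "Supported" (True) as the positive class."""
    pairs = list(zip(predictions, labels))
    tp = sum(1 for p, y in pairs if p and y)          # predicted supported, truly supported
    fp = sum(1 for p, y in pairs if p and not y)      # predicted supported, truly not supported
    fn = sum(1 for p, y in pairs if not p and y)      # predicted not supported, truly supported
    tn = sum(1 for p, y in pairs if not p and not y)  # predicted not supported, truly not supported
    total = len(pairs)
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }
```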

Part 2: Textual Entailment Model (35 points)

Use DeBERTa-v3 (fine-tuned on FEVER, MNLI, and ANLI datasets) to determine if facts are entailed by retrieved passages.

Run the Model:

Use the command:

python factchecking_main.py --mode entailment

Implementation Steps:

Perform sentence splitting and data cleaning on Wikipedia passages.

Compare each fact to the individual sentences within the passages and use a max score approach: the highest sentence-level entailment score becomes the score for the fact.

Prune low-overlap passages before entailment to optimize runtime.
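
The three steps above might fit together as in the sketch below. It is written against a hypothetical `entail_prob(premise, hypothesis)` callable, which in practice would wrap the DeBERTa-v3 model. The naive regex sentence splitter and the `min_overlap` value of 0.2 are also assumptions chosen for illustration.

```python
import re

def split_sentences(passage):
    """Naive splitter on ., !, ? followed by whitespace; a library splitter would be more robust."""
    parts = re.split(r"(?<=[.!?])\s+", passage.strip())
    return [p for p in parts if p]

def word_set(text):
    """Lowercased set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def max_entailment_score(fact, passages, entail_prob, min_overlap=0.2):
    """Highest entailment probability over all sentences of the passages that survive pruning.

    entail_prob(premise, hypothesis) -> float is a hypothetical callable supplied by the caller.
    """
    fact_words = word_set(fact)
    best = 0.0
    for passage in passages:
        overlap = len(fact_words & word_set(passage)) / max(len(fact_words), 1)
        if overlap < min_overlap:
            continue  # prune: skip the expensive model calls for unrelated passages
        for sentence in split_sentences(passage):
            best = max(best, entail_prob(sentence, fact))
    return best
```

Passing the scorer in as a function keeps the aggregation logic testable with a stub, so the pruning and max-score behavior can be checked without loading the model.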

Accuracy Requirement:

Achieve at least 83% accuracy on the dataset.

Report:

Describe:

How you mapped entailment decisions (e.g., threshold, probabilities)

Challenges in using textual entailment models for fact verification
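
One possible mapping from model output to a decision is to convert the logits to probabilities with a softmax and threshold the entailment probability. In this sketch the position of the entailment class (`entail_index=0`) and the 0.5 threshold are assumptions; the actual label order should be read from the model's `config.id2label`.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def decide(logits, entail_index=0, threshold=0.5):
    """Map one logit vector to a label by thresholding the entailment probability.

    entail_index is an assumption: check the model's config.id2label for the real order.
    """
    p_entail = softmax(logits)[entail_index]
    return "Supported" if p_entail >= threshold else "Not Supported"
```

Thresholding the probability, rather than taking the argmax over all classes, leaves a tunable knob for trading precision against recall.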

Part 3: Error Analysis (15 points)

Conduct an error analysis on your textual entailment model from Part 2.

Select 10 False Positives (predicted "supported" but actually "not supported") and 10 False Negatives (predicted "not supported" but actually "supported").
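
Collecting the two error sets can be automated once predictions are stored next to gold labels. The sketch below assumes each example is a dict with `fact` and `label` keys and that labels are the strings "Supported" and "Not Supported"; this schema is hypothetical and should be adapted to the dataset's actual format.

```python
def collect_errors(examples, predictions, limit=10):
    """Split misclassified examples into (false_positives, false_negatives), capped at `limit` each."""
    false_pos, false_neg = [], []
    for example, pred in zip(examples, predictions):
        gold = example["label"]
        if pred == "Supported" and gold == "Not Supported":
            false_pos.append(example)   # predicted supported, actually not supported
        elif pred == "Not Supported" and gold == "Supported":
            false_neg.append(example)   # predicted not supported, actually supported
    return false_pos[:limit], false_neg[:limit]
```

This takes the first `limit` errors in dataset order. Shuffling the examples first would give a less order-dependent sample.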

Categorize Errors:

Define 2-4 fine-grained error categories (e.g., "Incomplete Wikipedia passage," "Ambiguous fact interpretation").

Detailed Report:

For 3 errors, provide:

Fact text, model prediction, true label

Error category and explanation (1-3 sentences)

Part 4: AI Model Output Evaluation (20 points)

Evaluate the performance of two LLMs (e.g., ChatGPT, Gemini) using creative prompts.

Create Two Prompts:

Prompt 1: A blog post or LinkedIn post relevant to your work.

Prompt 2: A statement of purpose draft with placeholders for your personal details.

Run and Compare Outputs:

For each prompt, get responses from two models. Evaluate each response based on at least three aspects:

Factuality, Structure, Tone, Empathy, Coherence, etc.

Report Submission:

For each response, submit:

Prompt text

Model response

3 evaluated aspects with reasoning (e.g., "Tone: The response was appropriately formal but lacked empathy.")

Identify at least two flaws across all responses.

Submission Guidelines

Code Submission: Upload your code files for Parts 1 and 2.

Report Submission: Upload a single PDF or text file covering Parts 3 and 4. Ensure clarity and conciseness.

Grading Criteria

Part 1 (30 points): Word overlap method implementation and accuracy

Part 2 (35 points): Entailment model usage and performance

Part 3 (15 points): Quality of error analysis

Part 4 (20 points): Thoughtful AI model evaluation

Need Help with This Assignment?

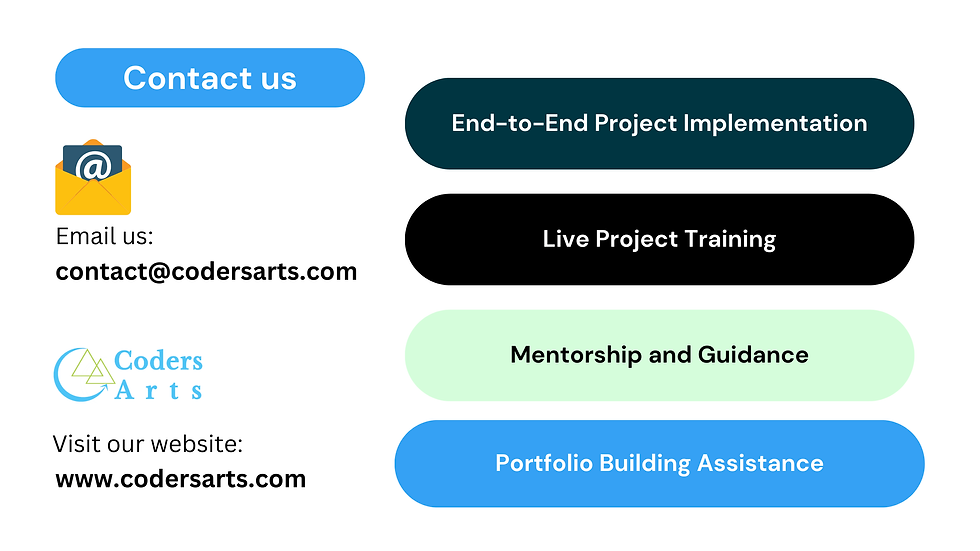

Are you struggling with implementing fact-checking models, working with Hugging Face transformers, or need guidance with textual entailment models? Codersarts offers expert programming help for students working on AI/ML assignments. Our services include:

Assignment Help for AI/ML and NLP Projects

1:1 Live Sessions with Expert Mentors

Custom Code Development and Implementation Support

Error Analysis Guidance

Get Expert Guidance Today

If you need professional assistance with your assignments or custom Python solutions, Codersarts is here to help! Visit Codersarts for:

AI Assignment Help Services

Hugging Face and PyTorch Code Implementation

Project-Based Learning and Guidance

Visit Codersarts today for specialized AI/ML assignment help and take your learning to the next level: Codersarts AI Help Services

Related Services and Recommended Resources for Students

If you’re working on AI/ML assignments or need guidance with LLM-based projects, these resources and services will help you succeed:

AI assignment help for hands-on projects and coursework

Python code implementation for fact-checking and NLP tasks

Assistance with textual entailment model assignments using Hugging Face

NLP project help for beginners and advanced learners working with Hugging Face models

Solutions for word overlap and fact-checking assignments using custom metrics

Access to Codersarts AI and ML assignment services tailored to student needs

Support with error analysis in NLP models for improving accuracy

Expert help for ChatGPT fact-checking assignments and similar projects
