Transformer Language Modeling Assignment Help

- Codersarts

- Sep 13, 2024

Updated: Sep 18, 2024

Transformer language models are at the heart of modern AI advancements, especially in natural language processing (NLP) tasks such as machine translation, text summarization, and question-answering systems.

Unlike earlier sequence models such as recurrent neural networks (RNNs), Transformers rely on a mechanism known as self-attention, which lets them build the context of each word by looking at the entire sequence at once rather than processing it one word at a time. This makes Transformers highly effective at handling long-range dependencies and complex language patterns.

Because self-attention can be computed in parallel across a whole sequence, Transformers train efficiently on modern hardware and have outperformed earlier recurrent models on many NLP tasks. However, understanding and implementing them can be challenging. Their design combines several components, including self-attention layers, multi-head attention, and positional encodings, which require a solid understanding of neural networks, matrix operations, and optimization techniques.

For students and developers, working with Transformers often requires not only grasping the theory but also mastering the technical details of building, training, and fine-tuning these models. That's where expert help becomes invaluable: guidance through this complexity helps you complete assignments and projects while building a solid grasp of how Transformer models work.

At Codersarts, we provide the specialized support needed to navigate these challenges and achieve success in your academic or professional endeavors.

Services Offered:

Assignment Help: Assistance in completing Transformer-based assignments, including tasks such as building a Transformer from scratch, training models, and debugging.

Project Guidance: Support for students or developers working on Transformer-based projects, from research papers to practical applications like NLP, translation models, and more.

Coursework Assistance: Help with coursework involving Transformer models, from concept understanding to code implementation.

Code Review & Debugging: Expert guidance in debugging, testing, and optimizing Transformer models.

Concept Learning & Mentorship: One-on-one sessions to help students or developers grasp concepts such as self-attention, positional encodings, multi-head attention, etc.

Proof of Concept (POC) Development: Help businesses or students build Transformer-based POCs for various use cases.

Transformer Model Assignment Ideas

Here are some tasks and exercises an instructor might set as a Transformer model assignment:

Basic Understanding and Implementation

Build a simple Transformer model from scratch: This could involve implementing the encoder-decoder architecture, attention mechanism, and feed-forward networks.

Fine-tune a pre-trained Transformer model: Students could take a pre-trained model like BERT or GPT and fine-tune it for a specific task.

Explain the attention mechanism: Students could explain the concept of attention, how it works in Transformers, and why it is important.

Experimentation and Analysis

Experiment with different hyperparameters: Students could try different values for hyperparameters like learning rate, batch size, and number of layers to see how they affect model performance.

Compare different Transformer architectures: Students could compare the performance of different Transformer architectures (e.g., BERT, GPT, T5) on a specific task.

Analyze the attention weights: Students could visualize the attention weights to understand how the model is focusing on different parts of the input sequence.

Practical Applications

Build a text summarization model: Students could use a Transformer model to summarize long texts.

Create a question answering system: Students could develop a system that can answer questions based on a given text.

Build a machine translation model: Students could train a Transformer model to translate text from one language to another.

Advanced Topics

Implement a Transformer-based generative model: Students could explore generative models like GPT-2 or GPT-3.

Develop a Transformer for computer vision: Students could explore how Transformers can be applied to computer vision tasks like image classification or object detection.

Research and implement a novel Transformer architecture: Students could propose and implement a new Transformer architecture.

These are just a few ideas for Transformer model assignments. The specific tasks can be tailored to the students' level of understanding and the goals of the course.

Additional Tasks and Exercises for Transformer Language Modeling Assignments

The tasks and exercises below are designed to give students hands-on experience with building, training, and experimenting with Transformer models.

1. Transformer Basics Implementation

Task: Implement a simplified Transformer Encoder from scratch.

Objective: Focus on self-attention, residual connections, and linear layers. Avoid ready-made PyTorch modules such as nn.TransformerEncoder; use only basic components such as Linear layers, softmax, and activation functions.

Skills Covered: Understanding and implementing Transformer architecture and attention mechanisms from scratch.
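
The self-attention step at the core of this task can be sketched in a few lines. The example below is a minimal illustration in plain Python so that every matrix operation is visible; an actual assignment would more likely use PyTorch tensors and Linear layers. The function names, the toy input, and the identity projection matrices are assumptions made for illustration, and residual connections and the feed-forward layer are left out.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(a):
    """Swap rows and columns of a matrix stored as lists of rows."""
    return [list(col) for col in zip(*a)]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x is seq_len x d_model; w_q, w_k, w_v are d_model x d_k projections.
    Returns (output, attention_weights).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(q[0])
    scores = matmul(q, transpose(k))  # seq_len x seq_len similarity scores
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

# Toy input: 3 tokens with d_model = 2 and identity projections (illustrative).
identity = [[1.0, 0.0], [0.0, 1.0]]
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = self_attention(x, identity, identity, identity)
```

Each row of attn is a probability distribution over the input positions, which is the quantity later exercises visualize and mask.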

2. Character Sequence Classification

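Task: Train a Transformer model to predict, for each position in a sequence, how many times the character at that position has already occurred earlier in the sequence. The count is capped so that the problem becomes a 3-class classification task (for example, 0, 1, or 2 or more occurrences).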

Objective: Create a model that processes sequences and predicts labels for each character. The students should handle sequence input, label classification, and model training.

Skills Covered: Sequence modeling, Transformer layer building, classification tasks.
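
To make the labeling concrete, here is a small sketch of how targets for this task could be generated. It assumes the three classes are 0, 1, and "2 or more" previous occurrences, which is one natural reading of the 3-class setup; the function name count_labels and the max_count parameter are hypothetical.

```python
from collections import Counter

def count_labels(text, max_count=2):
    """Label each position with how often its character appeared earlier.

    Counts are clipped to max_count, giving max_count + 1 classes
    (an assumed reading of the 3-class setup: 0, 1, or 2 or more).
    """
    seen = Counter()
    labels = []
    for ch in text:
        labels.append(min(seen[ch], max_count))
        seen[ch] += 1
    return labels

print(count_labels("banana"))  # prints [0, 0, 0, 1, 1, 2]
```

Because the label at each position depends only on earlier characters, the task is a useful check that attention is actually looking back over the sequence.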

3. Implement Positional Encodings

Task: Extend the existing Transformer model to include positional encodings for sequences.

Objective: Discuss how positional encodings work and implement the PositionalEncoding module. Train the model again and compare the performance.

Skills Covered: Positional encoding theory and implementation, training models with sequence context.
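
One common choice is the fixed sinusoidal encoding from the original Transformer paper; the assignment's PositionalEncoding module may instead use learned position embeddings, so treat the following as a sketch of one option rather than the required design. The function names are illustrative.

```python
import math

def sinusoidal_positions(seq_len, d_model):
    """Build a seq_len x d_model table: sine on even dims, cosine on odd dims."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Each pair of dimensions (2k, 2k + 1) shares one frequency.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

def add_positions(embeddings):
    """Add the positional table elementwise to a seq_len x d_model embedding matrix."""
    pe = sinusoidal_positions(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(e_row, p_row)]
            for e_row, p_row in zip(embeddings, pe)]
```

Without some positional signal, self-attention treats the input as an unordered set, so a task that depends on what came earlier in the sequence is a good test of whether the encoding helps.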

4. Build Transformer for Language Modeling

Task: Implement a Transformer for language modeling, predicting the next character in a sequence based on previous characters.

Objective: Use the Transformer encoder for character-level language modeling and predict the next character in a sequence of text.

Skills Covered: Language modeling, using Transformers for sequential data.
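
The data preparation for next-character prediction can be sketched as follows: each training example pairs a window of characters with the same window shifted one step to the right. The helper names, the sample text, and the non-overlapping chunking are assumptions made for illustration.

```python
def build_vocab(text):
    """Map each distinct character to an integer index."""
    chars = sorted(set(text))
    return {ch: i for i, ch in enumerate(chars)}

def next_char_examples(text, vocab, seq_len):
    """Slice text into (input, target) index sequences.

    The target is the input shifted by one position, so the model
    learns to predict character t + 1 from characters up to t.
    """
    ids = [vocab[ch] for ch in text]
    examples = []
    for start in range(0, len(ids) - seq_len, seq_len):
        chunk = ids[start:start + seq_len + 1]
        examples.append((chunk[:-1], chunk[1:]))
    return examples

vocab = build_vocab("hello world")
examples = next_char_examples("hello world", vocab, seq_len=5)
```

With this sample text the first input window is "hello" and its target is "ello ". Training on such pairs only makes sense if the model cannot see future characters, which is why this task is usually combined with causal masking.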

5. Visualization of Attention Maps

Task: Visualize the attention weights during training. Identify which parts of the input the model attends to at each step.

Objective: Analyze and interpret attention maps produced by the Transformer model. Ensure the model is focusing on relevant parts of the input sequence.

Skills Covered: Attention mechanism understanding, debugging, and model interpretability.
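
In practice attention maps are usually drawn as heatmaps with a plotting library, but a dependency-free text rendering is often enough for a first look. The sketch below assumes single-character tokens (as in character-level models) and attention weights in the range 0 to 1; the function name and the shading characters are arbitrary choices.

```python
def render_attention(weights, tokens, shades=" .:-=+*#%@"):
    """Render an attention matrix as text.

    Rows are query positions, columns are key positions, and darker
    characters mean larger weights. Assumes one character per token.
    """
    lines = ["  " + "".join(tokens)]
    for tok, row in zip(tokens, weights):
        cells = "".join(
            shades[min(int(w * len(shades)), len(shades) - 1)] for w in row
        )
        lines.append(f"{tok} {cells}")
    return "\n".join(lines)

print(render_attention([[1.0, 0.0], [0.5, 0.5]], ["a", "b"]))
```

Reading the output row by row shows where each position puts its attention, for example whether a repeated character attends to its earlier occurrences.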

6. Experiment with Hyperparameters

Task: Experiment with different hyperparameters (e.g., embedding size, learning rate, number of Transformer layers) to achieve optimal model performance.

Objective: Show the impact of different hyperparameter values on model performance and training speed.

Skills Covered: Hyperparameter tuning, performance optimization.
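
A simple way to organize such experiments is to enumerate every combination in a small grid and train one model per configuration. The values below are placeholders chosen for illustration, not recommended settings, and the training call itself is left out.

```python
from itertools import product

# Hypothetical search space; adjust to the assignment's compute budget.
grid = {
    "embedding_size": [64, 128],
    "learning_rate": [1e-3, 1e-4],
    "num_layers": [1, 2, 4],
}

def iter_configs(grid):
    """Yield one dict per combination of hyperparameter values."""
    keys = list(grid)
    for values in product(*(grid[k] for k in keys)):
        yield dict(zip(keys, values))

configs = list(iter_configs(grid))
print(len(configs))  # prints 12
```

Recording accuracy and training time for each configuration makes the comparison asked for in the objective straightforward.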

7. Multi-Head Attention Exploration

Task: Implement multi-head self-attention in the Transformer model and observe whether, and by how much, it improves model performance compared to single-head attention.

Objective: Compare the performance of a multi-head self-attention model with a single-head attention model.

Skills Covered: Multi-head self-attention implementation, experimentation with model architectures.
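
The bookkeeping that distinguishes multi-head from single-head attention is splitting the model dimension into per-head slices and concatenating the results afterwards. The sketch below shows only that reshaping step, with hypothetical helper names; in a full implementation each head would run attention on its own slice of the projected queries, keys, and values, and a final linear layer would follow the merge.

```python
def split_heads(x, num_heads):
    """Split a seq_len x d_model matrix into num_heads matrices of width d_head."""
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads
    return [[row[h * d_head:(h + 1) * d_head] for row in x]
            for h in range(num_heads)]

def merge_heads(heads):
    """Concatenate per-head outputs back into a seq_len x d_model matrix."""
    seq_len = len(heads[0])
    return [sum((head[t] for head in heads), []) for t in range(seq_len)]

x = [[1, 2, 3, 4], [5, 6, 7, 8]]
heads = split_heads(x, num_heads=2)
```

Here heads[0] holds the first two dimensions of every position and heads[1] the last two, and merge_heads(heads) restores the original matrix.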

8. Sequence Length and Generalization

Task: Train the Transformer on fixed-length sequences (e.g., 20 characters) and then test on slightly longer sequences. Explore if the model can generalize to sequences of different lengths.

Objective: Discuss the limitations of positional encodings and how sequence length affects model generalization.

Skills Covered: Generalization in models, limitations of positional encoding.

9. Causal Masking for Language Models

Task: Implement causal masking in the Transformer model to ensure that each token only attends to previous tokens and not future tokens.

Objective: Understand the significance of causal masking in language modeling tasks and how it prevents the model from "cheating."

Skills Covered: Causal masking, attention mechanisms in language models.
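
Causal masking is typically applied to the attention scores just before the softmax: scores for future positions are set to negative infinity so that they receive zero weight. The following is a minimal plain-Python sketch of that idea with illustrative function names; in PyTorch the same effect is usually achieved with a lower-triangular mask tensor.

```python
import math

def causal_mask(seq_len):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]

def masked_softmax(scores, mask):
    """Row-wise softmax in which disallowed positions get zero weight."""
    weights = []
    for row, allowed in zip(scores, mask):
        masked = [s if ok else -math.inf for s, ok in zip(row, allowed)]
        m = max(masked)  # finite, because each position may attend to itself
        exps = [math.exp(s - m) for s in masked]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

# With equal scores, each position spreads attention evenly over its past.
scores = [[0.0] * 3 for _ in range(3)]
weights = masked_softmax(scores, causal_mask(3))
```

In this example the first position attends only to itself, the second splits its weight evenly over the first two positions, and no position assigns any weight to a later one.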

10. Train on Small Dataset and Debug

Task: Train the Transformer on a very small dataset and ensure it overfits. Use the model to debug by inspecting attention maps and model predictions.

Objective: Train the model on a small subset of the data to ensure correctness and check if the model can correctly learn and overfit.

Skills Covered: Debugging training processes, attention visualization, model diagnosis.

These exercises will give students hands-on experience with core concepts of Transformers, self-attention, language modeling, and hyperparameter tuning.

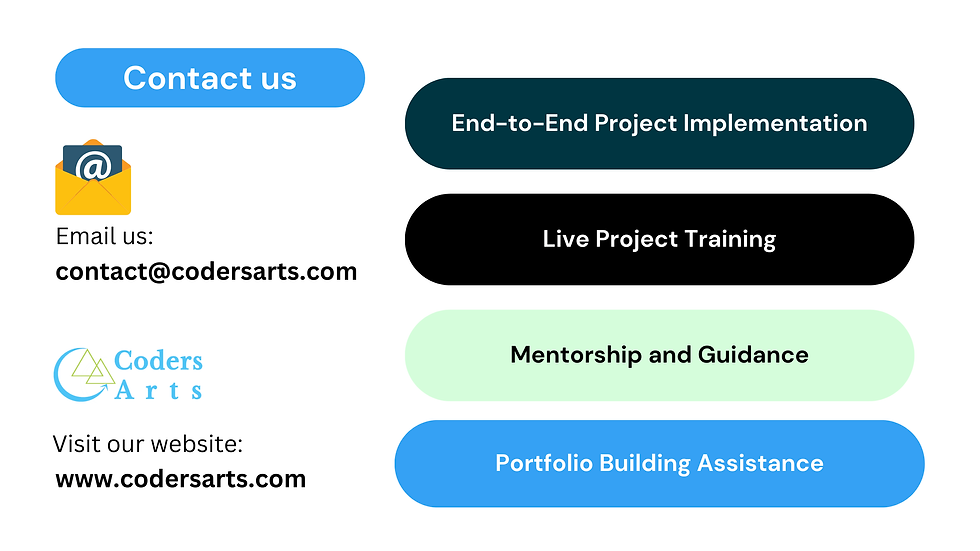

Why Choose Us:

At Codersarts, we pride ourselves on having a team of seasoned AI and machine learning experts with hands-on experience in building and deploying Transformer models. Our professionals have worked on a wide range of projects, from academic research implementations to production-level applications like machine translation and natural language understanding.

What sets us apart is our ability to simplify even the most complex concepts of Transformer architectures. Whether you're a beginner trying to grasp the basics of self-attention and positional encodings, or an advanced learner looking to optimize multi-head attention layers and fine-tune large-scale models, we offer tailored guidance that matches your learning pace and project needs.

With our support, students and developers can overcome the challenges associated with Transformer models, gain confidence in their work, and complete their assignments or projects successfully. Our experts are dedicated to helping you understand not only the what and how of Transformers but also the why, so that you can build long-term mastery of this technology.

How It Works:

1. Submit Your Assignment Details

Start by providing us with the details of your Transformer language modeling assignment or project. Share any specific requirements, goals, and deadlines so that we can fully understand your needs.

2. Get a Free Consultation

After reviewing your submission, our experts will schedule a free consultation with you to discuss your project in detail. During this session, we'll outline how we can help, whether it's providing guidance on implementation, reviewing your code, or offering theoretical explanations.

3. Receive a Structured Assistance Plan

Based on the consultation, we'll create a personalized, step-by-step plan that includes milestones and deliverables. This ensures you receive help that is organized, transparent, and aligned with your timelines.

4. Ongoing Support and Feedback

Once the plan is set, we will work closely with you throughout the process. Whether you need help debugging, understanding complex concepts, or improving your model’s performance, our experts will be available for ongoing support and real-time feedback.

5. Complete with Confidence

At the end of the process, you'll not only have completed your Transformer language modeling assignment or project but will also have a solid understanding of the key concepts and technical implementations. You'll walk away with both the work completed and the knowledge to confidently tackle future challenges.

Let Codersarts be your trusted partner in mastering Transformers and excelling in your AI journey!

Ready to get started with expert help on your Transformer Language Modeling Assignment? Let our team at Codersarts guide you through every step of the process. Whether you need assistance with understanding concepts, building models, or completing projects, we're here to help.

Get personalized support from AI experts

Tailored assistance for your specific needs

Fast turnaround times to meet your deadlines

Don't wait—unlock your potential in AI and machine learning today! Click the button above to submit your assignment details or schedule a free consultation with one of our experts.
